How Kubernetes Achieves High Availability for Its Components

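In Kubernetes, components such as the Controller Manager, the Scheduler, and user-written controllers achieve high availability by running multiple replicas. However, if several replicas of a controller worked at the same time, they would inevitably race with each other on the resources they watch, so normally only one of the replicas is active at any given time.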

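To avoid this contention, Kubernetes provides a leader election mechanism: the replicas compete to become the leader, only the leader does the work, and the others wait. In this article we look at how Kubernetes makes components highly available, first from the principle behind leader election and then from how to use it as a user.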

Leader election

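The principle of leader election is to use a Lease, ConfigMap, or Endpoints resource as an optimistic lock. A Lease records the leader's identity, acquisition time, and similar information in its spec; ConfigMap and Endpoints store the same leader record in their `control-plane.alpha.kubernetes.io/leader` annotation. If we implemented this ourselves we could define whatever fields we like; what actually provides the locking is the object's `resourceVersion`.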

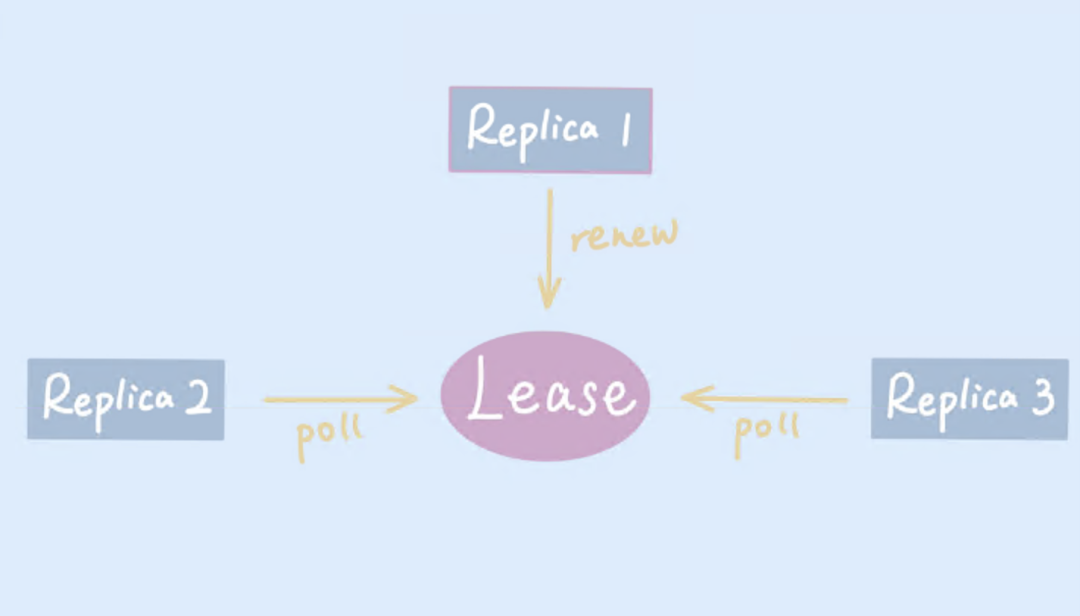

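The principle is illustrated in the figure: multiple replicas compete for the same resource. The replica that grabs the lock becomes the leader and keeps renewing it periodically; a replica that fails to grab it waits in place and keeps retrying.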

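client-go provides utility methods for this lock (the `k8s.io/client-go/tools/leaderelection` package), and Kubernetes components use client-go directly. Next, let's analyze how these client-go utilities implement leader election.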

Acquiring the lock

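First, it gets the lock by its configured name and creates it if it does not exist. It then checks whether there is a leader and whether that leader's lease has expired: if there is no leader or the lease has expired, it tries to grab the lock; otherwise it returns and waits.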

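Acquiring the lock necessarily involves updating a resource, and Kubernetes makes updates atomic through optimistic locking on the version number. When a resource is updated, the API server compares the `resourceVersion` in the request with the stored one and returns a conflict error if they differ. So if two replicas read the lock and both try to update it, only the first update succeeds, which is what makes the update safe.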

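The code for acquiring the lock (client-go's `tryAcquireOrRenew`, which the current leader also uses to renew its lease) is as follows.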

```go
func (le *LeaderElector) tryAcquireOrRenew(ctx context.Context) bool {
	now := metav1.Now()
	leaderElectionRecord := rl.LeaderElectionRecord{
		HolderIdentity:       le.config.Lock.Identity(),
		LeaseDurationSeconds: int(le.config.LeaseDuration / time.Second),
		RenewTime:            now,
		AcquireTime:          now,
	}

	// 1. obtain or create the ElectionRecord
	oldLeaderElectionRecord, oldLeaderElectionRawRecord, err := le.config.Lock.Get(ctx)
	if err != nil {
		if !errors.IsNotFound(err) {
			klog.Errorf("error retrieving resource lock %v: %v", le.config.Lock.Describe(), err)
			return false
		}
		if err = le.config.Lock.Create(ctx, leaderElectionRecord); err != nil {
			klog.Errorf("error initially creating leader election record: %v", err)
			return false
		}
		le.setObservedRecord(&leaderElectionRecord)
		return true
	}

	// 2. Record obtained, check the Identity & Time
	// setObservedRecord also stamps observedTime with the local clock, so the
	// expiry check below is based on when this replica last saw the record change.
	if !bytes.Equal(le.observedRawRecord, oldLeaderElectionRawRecord) {
		le.setObservedRecord(oldLeaderElectionRecord)
		le.observedRawRecord = oldLeaderElectionRawRecord
	}
	if len(oldLeaderElectionRecord.HolderIdentity) > 0 &&
		le.observedTime.Add(le.config.LeaseDuration).After(now.Time) &&
		!le.IsLeader() {
		klog.V(4).Infof("lock is held by %v and has not yet expired", oldLeaderElectionRecord.HolderIdentity)
		return false
	}

	// 3. We're going to try to update. The leaderElectionRecord is set to its default
	// here. Let's correct it before updating.
	if le.IsLeader() {
		leaderElectionRecord.AcquireTime = oldLeaderElectionRecord.AcquireTime
		leaderElectionRecord.LeaderTransitions = oldLeaderElectionRecord.LeaderTransitions
	} else {
		leaderElectionRecord.LeaderTransitions = oldLeaderElectionRecord.LeaderTransitions + 1
	}

	// update the lock itself; if another replica updated it first, the
	// resourceVersion no longer matches and the API server rejects this update
	if err = le.config.Lock.Update(ctx, leaderElectionRecord); err != nil {
		klog.Errorf("Failed to update lock: %v", err)
		return false
	}

	le.setObservedRecord(&leaderElectionRecord)
	return true
}
```

The client-go repository ships a leader election example. After starting one instance of it, its Lease looks like this:

```
$ kubectl get lease demo -oyaml
apiVersion: coordination.k8s.io/v1
kind: Lease
metadata:
  ...
spec:
  acquireTime: "2022-10-12T18:38:41.381108Z"
  holderIdentity: "1"
  leaseDurationSeconds: 60
  leaseTransitions: 0
  renewTime: "2022-10-12T18:38:41.397199Z"
```

Releasing the lock

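Releasing the lock means that, before exiting, the leader performs one more update that clears the leader information in the Lease: the holder identity is left empty and the lease duration is set to 1 second. client-go only calls `release()` when `ReleaseOnCancel` is set in the election config.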

```go
func (le *LeaderElector) release() bool {
	// Only the current leader has anything to release.
	if !le.IsLeader() {
		return true
	}
	now := metav1.Now()
	// HolderIdentity is deliberately left empty, so other candidates see no leader.
	leaderElectionRecord := rl.LeaderElectionRecord{
		LeaderTransitions:    le.observedRecord.LeaderTransitions,
		LeaseDurationSeconds: 1,
		RenewTime:            now,
		AcquireTime:          now,
	}
	if err := le.config.Lock.Update(context.TODO(), leaderElectionRecord); err != nil {
		klog.Errorf("Failed to release lock: %v", err)
		return false
	}
	le.setObservedRecord(&leaderElectionRecord)
	return true
}
```

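After killing the process started in the previous step, check its Lease again: `holderIdentity` is now empty and `leaseDurationSeconds` is 1.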

```
$ kubectl get lease demo -oyaml
apiVersion: coordination.k8s.io/v1
kind: Lease
metadata:
  ...
spec:
  acquireTime: "2022-10-12T18:41:26.557658Z"
  holderIdentity: ""
  leaseDurationSeconds: 1
  leaseTransitions: 0
  renewTime: "2022-10-12T18:41:26.557658Z"
```

How to use it in a controller

When we implement our own controllers we usually use controller-runtime, which already wraps the leader election logic.

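The main logic lives in two places. The first is building the basic Lease information, where controller-runtime fills in whatever the user did not specify: for example, it uses the namespace the process runs in as the leader election namespace, and generates a unique ID from the hostname plus a random UUID.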

```go
func NewResourceLock(config *rest.Config, recorderProvider recorder.Provider, options Options) (resourcelock.Interface, error) {
	if options.LeaderElectionResourceLock == "" {
		options.LeaderElectionResourceLock = resourcelock.LeasesResourceLock
	}

	// LeaderElectionID must be provided to prevent clashes
	if options.LeaderElectionID == "" {
		return nil, errors.New("LeaderElectionID must be configured")
	}

	// Default the namespace (if running in cluster)
	if options.LeaderElectionNamespace == "" {
		var err error
		options.LeaderElectionNamespace, err = getInClusterNamespace()
		if err != nil {
			return nil, fmt.Errorf("unable to find leader election namespace: %w", err)
		}
	}

	// Leader id, needs to be unique
	id, err := os.Hostname()
	if err != nil {
		return nil, err
	}
	id = id + "_" + string(uuid.NewUUID())

	// Construct clients for leader election
	rest.AddUserAgent(config, "leader-election")
	corev1Client, err := corev1client.NewForConfig(config)
	if err != nil {
		return nil, err
	}
	coordinationClient, err := coordinationv1client.NewForConfig(config)
	if err != nil {
		return nil, err
	}

	return resourcelock.New(options.LeaderElectionResourceLock,
		options.LeaderElectionNamespace,
		options.LeaderElectionID,
		corev1Client,
		coordinationClient,
		resourcelock.ResourceLockConfig{
			Identity:      id,
			EventRecorder: recorderProvider.GetEventRecorderFor(id),
		})
}
```

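The second is starting the election: it registers the lock, the lease timing parameters and the callbacks, and then starts the election loop in a goroutine.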

```go
func (cm *controllerManager) startLeaderElection(ctx context.Context) (err error) {
	l, err := leaderelection.NewLeaderElector(leaderelection.LeaderElectionConfig{
		Lock:          cm.resourceLock,
		LeaseDuration: cm.leaseDuration,
		RenewDeadline: cm.renewDeadline,
		RetryPeriod:   cm.retryPeriod,
		Callbacks: leaderelection.LeaderCallbacks{
			OnStartedLeading: func(_ context.Context) {
				if err := cm.startLeaderElectionRunnables(); err != nil {
					cm.errChan <- err
					return
				}
				close(cm.elected)
			},
			OnStoppedLeading: func() {
				if cm.onStoppedLeading != nil {
					cm.onStoppedLeading()
				}
				cm.gracefulShutdownTimeout = time.Duration(0)
				cm.errChan <- errors.New("leader election lost")
			},
		},
		ReleaseOnCancel: cm.leaderElectionReleaseOnCancel,
	})
	if err != nil {
		return err
	}

	// Start the leader elector process
	go func() {
		l.Run(ctx)
		<-ctx.Done()
		close(cm.leaderElectionStopped)
	}()
	return nil
}
```
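The callbacks rely on a common Go idiom: closing a channel wakes every goroutine blocked on it, which is how `close(cm.elected)` releases everything that must wait for leadership (users see the same channel through the manager's `Elected()` method), while lost leadership is reported as an error on `errChan`. A minimal sketch with a hypothetical `manager` type that keeps only those two channels:

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// manager is a hypothetical, stripped-down stand-in for controller-runtime's
// controllerManager, keeping only the two channels the callbacks touch.
type manager struct {
	elected chan struct{}
	errChan chan error
}

func newManager() *manager {
	// errChan is buffered so the callback can report without blocking.
	return &manager{elected: make(chan struct{}), errChan: make(chan error, 1)}
}

// onStartedLeading broadcasts "we are the leader" by closing the channel.
func (m *manager) onStartedLeading() { close(m.elected) }

// onStoppedLeading reports lost leadership as an error, like the manager does.
func (m *manager) onStoppedLeading() { m.errChan <- errors.New("leader election lost") }

// runAfterElection starts n workers that block until election, triggers the
// election, and returns how many workers ran.
func runAfterElection(m *manager, n int) int {
	var wg sync.WaitGroup
	var mu sync.Mutex
	ran := 0
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			<-m.elected // blocks until the channel is closed
			mu.Lock()
			ran++
			mu.Unlock()
		}()
	}
	m.onStartedLeading()
	wg.Wait()
	return ran
}

func main() {
	m := newManager()
	fmt.Println("workers started:", runAfterElection(m, 3))
	m.onStoppedLeading()
	fmt.Println("manager error:", <-m.errChan)
}
```

A close is used rather than sending a value because the number of waiters is not known in advance, and a closed channel stays readable for goroutines that start waiting later.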

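Because controller-runtime wraps the election logic, using it is much easier: we only need to specify the Lease information when initializing the Manager.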

```go
scheme := runtime.NewScheme()
_ = corev1.AddToScheme(scheme)

// 1. init Manager
mgr, _ := ctrl.NewManager(ctrl.GetConfigOrDie(), ctrl.Options{
	Scheme:           scheme,
	Port:             9443,
	LeaderElection:   true,
	LeaderElectionID: "demo.xxx",
})

// 2. init Reconciler(Controller)
_ = ctrl.NewControllerManagedBy(mgr).
	For(&corev1.Pod{}).
	Complete(&ApplicationReconciler{})
...
```

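Just set `LeaderElection: true` and a `LeaderElectionID`, which becomes the name of the Lease and must be unique so that it does not clash with other controllers' Leases. controller-runtime fills in the rest of the information for you.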