Kubernetes v1.11.4 Test Environment Cluster Deployment

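Kubernetes Architecture

Kubernetes originated from Borg, Google's internal system, and provides an application-oriented system for deploying and managing container clusters. Its goal is to remove the burden of orchestrating physical/virtual compute, network, and storage infrastructure, so that application operators and developers can focus entirely on container-centric primitives for self-service operation. Kubernetes also provides a stable, compatible foundation (platform) for building customized workflows and higher-level automation.

Kubernetes offers comprehensive cluster management capabilities: multi-layered security and admission mechanisms, multi-tenant application support, transparent service registration and discovery, a built-in load balancer, failure detection and self-healing, rolling upgrades and online scaling of services, an extensible automatic resource scheduling mechanism, and multi-granularity resource quota management.

Kubernetes also provides management tooling that covers development, deployment and testing, and operations monitoring.

The master runs the following components:

1. API Server: provides the HTTP/HTTPS RESTful API, that is, the Kubernetes API. The API server is the front end of the Kubernetes cluster; client tools (CLI or UI) and the other Kubernetes components manage all cluster resources through it.
2. Scheduler (kube-scheduler): decides which node a Pod runs on. When scheduling, it takes into account the cluster topology, the current load on each node, and the application's requirements for high availability, performance, and data affinity.
3. Controller Manager (kube-controller-manager): manages the cluster's resources and keeps them in their desired state. It is made up of many controllers, including the replication controller, endpoints controller, namespace controller, and serviceaccounts controller. Each controller manages a different resource: for example, the Deployment, StatefulSet, and DaemonSet controllers manage the lifecycle of those workloads, and the namespace controller manages namespace resources.
4. etcd: stores the cluster's configuration and the state of every resource. When data changes, etcd quickly notifies the relevant Kubernetes components.
5. Pod network: for Pods to communicate with each other, the cluster must deploy a Pod network; flannel or Calico can be used as the network component. (kubectl is installed on every machine from which the cluster is operated.)
6. Components to install on the master: kube-apiserver, kube-controller-manager, kube-scheduler, kubectl, kubelet, kubeadm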

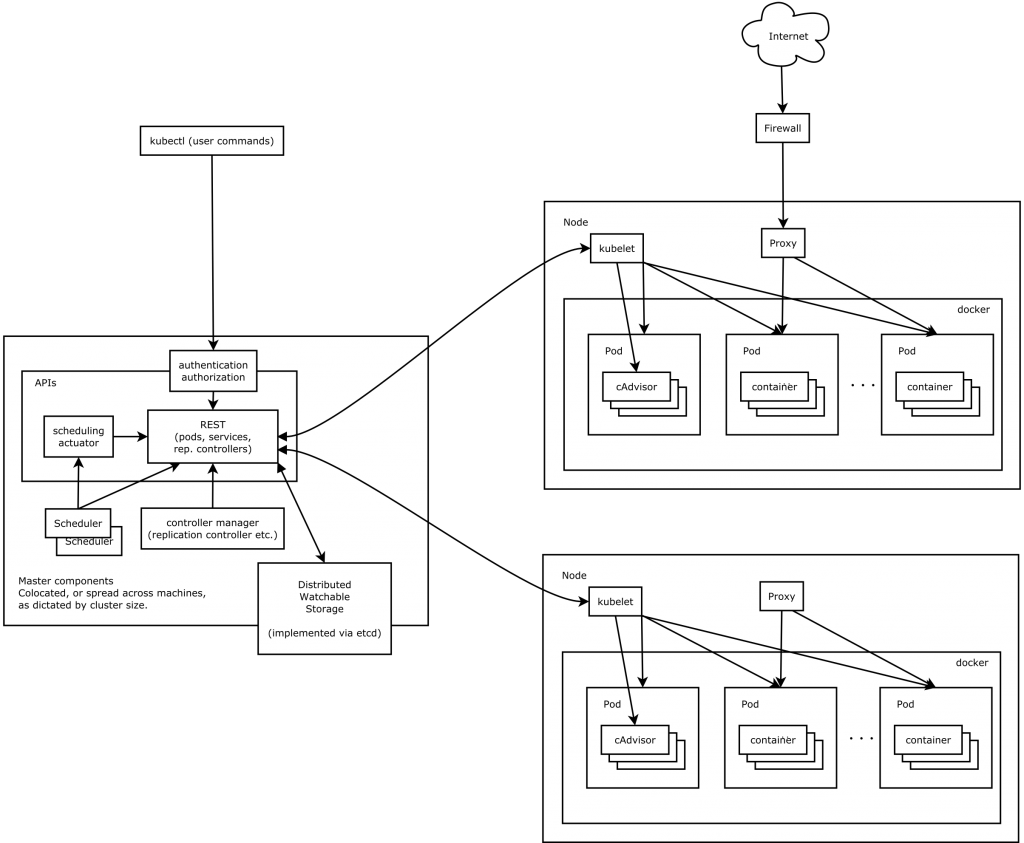

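Kubernetes architecture

Kubernetes borrows design ideas from Borg, such as Pod, Service, Labels, and one IP per Pod. Its overall architecture closely resembles Borg's, as shown in the figure.

Kubernetes consists of the following core components: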

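- etcd stores the state of the entire cluster;

- apiserver is the single entry point for resource operations and provides authentication, authorization, access control, and API registration and discovery;

- controller manager maintains the cluster state, for example failure detection, auto scaling, and rolling updates;

- scheduler handles resource scheduling, placing Pods on the appropriate machines according to the configured scheduling policy;

- kubelet maintains the container lifecycle and also manages volumes (CSI) and networking (CNI);

- Container runtime handles image management and actually runs Pods and containers (CRI);

- kube-proxy provides in-cluster service discovery and load balancing for Services;

In addition to the core components, several add-ons are recommended:

- kube-dns provides DNS for the whole cluster

- Ingress Controller provides an external entry point for services

- Heapster provides resource monitoring

- Dashboard provides a GUI

- Federation provides clusters that span availability zones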

Environment:

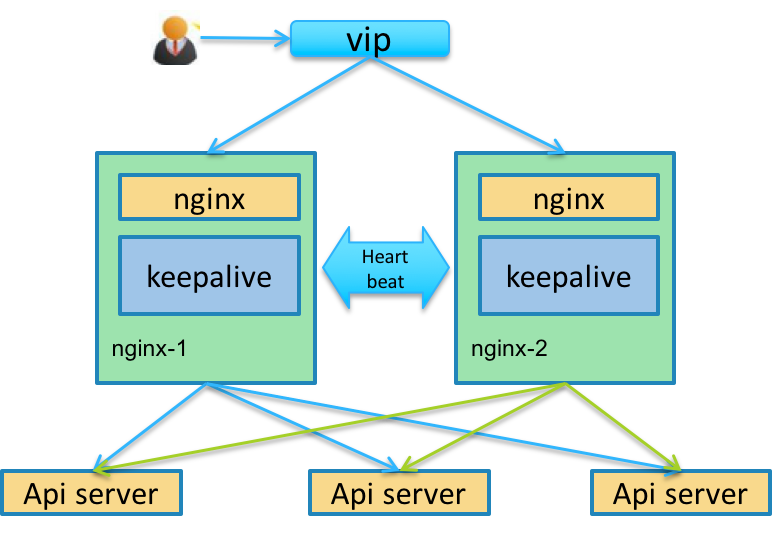

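This deployment simulates a highly available Kubernetes architecture in which etcd and the apiserver are co-located. The floating IP (VIP) of the apiserver service is 192.168.1.15.

- System: CentOS 7.5 (1804)
- Kernel: 3.10.0-862.el7.x86_64
- Kubernetes version: v1.11.4
- Docker version: 18.09.0-ce
- master 192.168.1.16, 2 cores, 4 GB RAM, 50 GB disk
- node1 192.168.1.17, 8 cores, 16 GB RAM, 200 GB disk
- node2 192.168.1.18, 8 cores, 16 GB RAM, 200 GB disk
- node3 192.168.1.19, 8 cores, 16 GB RAM, 200 GB disk
- etcd version: v3.2.22 (kube-apiserver and etcd are deployed together on the same nodes)
- etcd1 192.168.1.12, 4 cores, 8 GB RAM, 50 GB disk
- etcd2 192.168.1.13, 4 cores, 8 GB RAM, 50 GB disk
- etcd3 192.168.1.14, 4 cores, 8 GB RAM, 50 GB disk
- Storage: RBD (Ceph Block Device) | GlusterFS Heketi

Note: for data-persistence nodes in a production environment, at least 500 GB of disk space is recommended.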

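1. Preparation

For convenience, all operations are run as root. The following steps only need to be run on the Kubernetes cluster nodes.

Disable SELinux and the firewall

sed -ri 's#(SELINUX=).*#\1disabled#' /etc/selinux/config
setenforce 0
systemctl disable firewalld
systemctl stop firewalld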

Note: if the firewall is left enabled, the following ports must be opened.

Master Node Inbound

| Protocol | Port Range | Source | Purpose |
|---|---|---|---|
| TCP | 443 | Worker Nodes, API Requests, and End-Users | Kubernetes API server. |
| UDP | 8285 | Master & Worker Nodes | flannel overlay network - udp backend. This is the default network configuration (only required if using flannel) |
| UDP | 8472 | Master & Worker Nodes | flannel overlay network - vxlan backend (only required if using flannel) |

Worker Node Inbound

| Protocol | Port Range | Source | Purpose |
|---|---|---|---|
| TCP | 10250 | Master Nodes | Worker node Kubelet API for exec and logs. |
| TCP | 10255 | Heapster | Worker node read-only Kubelet API. |
| TCP | 30000-32767 | External Application Consumers | Default port range for external service ports. Typically, these ports would need to be exposed to external load-balancers, or other external consumers of the application itself. |
| TCP | ALL | Master & Worker Nodes | Intra-cluster communication (unnecessary if vxlan is used for networking) |
| UDP | 8285 | Master & Worker Nodes | flannel overlay network - udp backend. This is the default network configuration (only required if using flannel) |
| UDP | 8472 | Master & Worker Nodes | flannel overlay network - vxlan backend (only required if using flannel) |
| TCP | 179 | Worker Nodes | Calico BGP network (only required if the BGP backend is used) |

etcd Node Inbound

| Protocol | Port Range | Source | Purpose |
|---|---|---|---|
| TCP | 2379-2380 | Master Nodes | etcd server client API |
| TCP | 2379-2380 | Worker Nodes | etcd server client API (only required if using flannel or Calico). |

Disable swap

swapoff -a
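`swapoff -a` only lasts until the next reboot. A minimal sketch of one way to make it persistent is to comment out the swap entries in the fstab file. The helper name `comment_out_swap` is hypothetical, and GNU sed (as shipped with CentOS 7) is assumed.

```bash
# Comment out active swap entries in an fstab-style file so swap stays off after a reboot.
# Assumption: GNU sed. A backup copy is kept next to the file with a .bak suffix.
comment_out_swap() {
    fstab_file="$1"
    # Match lines that are not already comments and whose type field is "swap".
    sed -r -i.bak 's/^([^#[:space:]][^#]*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab_file"
}

# Example (run as root on each cluster node):
#   comment_out_swap /etc/fstab
```

The function takes the file as an argument so that it can be tried on a copy before touching `/etc/fstab`.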

Configure the forwarding-related kernel parameters; otherwise errors may occur later

cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
vm.swappiness=0
EOF
sysctl --system
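To confirm that the settings took effect, the live values can be read back from procfs. The sketch below is only an illustration: `read_sysctl` is a hypothetical helper, and its optional second argument exists solely so the function can be tried against a scratch directory. The `net.bridge.*` keys only exist once the `br_netfilter` kernel module is loaded.

```bash
# Print the live value of a sysctl key by reading it from procfs.
# $1: key such as net.bridge.bridge-nf-call-iptables
# $2: procfs root (hypothetical testing hook), defaults to /proc/sys
read_sysctl() {
    key_path=$(printf '%s' "$1" | tr '.' '/')
    cat "${2:-/proc/sys}/$key_path"
}

# Example on a prepared node:
#   modprobe br_netfilter
#   read_sysctl net.bridge.bridge-nf-call-iptables
```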

Load the IPVS modules. If the master node does not need to schedule workloads, IPVS does not need to be installed on it.

cat << EOF > /etc/sysconfig/modules/ipvs.modules
#!/bin/bash
ipvs_modules_dir="/usr/lib/modules/\`uname -r\`/kernel/net/netfilter/ipvs"
for i in \`ls \$ipvs_modules_dir | sed -r 's#(.*).ko.xz#\1#'\`; do
    /sbin/modinfo -F filename \$i &> /dev/null
    if [ \$? -eq 0 ]; then
        /sbin/modprobe \$i
    fi
done
EOF
chmod +x /etc/sysconfig/modules/ipvs.modules
bash /etc/sysconfig/modules/ipvs.modules

Install cfssl to generate the cluster's certificate files

# Install on the master node only
wget -O /bin/cfssl https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
wget -O /bin/cfssljson https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
wget -O /bin/cfssl-certinfo https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
for cfssl in `ls /bin/cfssl*`;do chmod +x $cfssl;done;
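Before generating any certificates it can help to confirm that the three binaries are on the PATH. A small sketch follows; the helper name `missing_tools` is an assumption, not part of cfssl.

```bash
# Print the name of every command from the argument list that is not found on PATH.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
    done
}

# Example: prints nothing when all three downloads succeeded.
#   missing_tools cfssl cfssljson cfssl-certinfo
```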

Install Docker and remove the docker0 bridge

Optional step; can be skipped

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

yum install -y docker-ce

mkdir -p /etc/docker/

cat << EOF > /etc/docker/daemon.json

{ "registry-mirrors": ["https://registry.docker-cn.com"],

"live-restore": true,

"default-shm-size": "128M",

"bridge": "none",

"max-concurrent-downloads": 10,

"oom-score-adjust": -1000,

"debug": false

}

EOF

systemctl restart docker

# After the restart, run `ip a`; the docker0 interface should no longer be listed

2. Install the etcd cluster

Deployment nodes

etcd1 192.168.1.12

etcd2 192.168.1.13

etcd3 192.168.1.14

Prepare the etcd certificates

Run on the master node

mkdir -pv $HOME/ssl && cd $HOME/ssl

cat > ca-config.json << EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "87600h"

}

}

}

}

EOF

cat > etcd-ca-csr.json << EOF

{

"CN": "etcd",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "HangZhou",

"L": "ZheJiang",

"O": "etcd",

"OU": "Etcd Security"

}

]

}

EOF

cat > etcd-csr.json << EOF

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"192.168.1.12",

"192.168.1.13",

"192.168.1.14",

"192.168.1.15"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "HangZhou",

"L": "ZheJiang",

"O": "etcd",

"OU": "Etcd Security"

}

]

}

EOF

Generate the certificates and copy them to the etcd nodes (run on the master node)

cfssl gencert -initca etcd-ca-csr.json | cfssljson -bare etcd-ca
cfssl gencert -ca=etcd-ca.pem -ca-key=etcd-ca-key.pem -config=ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd
mkdir -pv /etc/etcd/ssl
cp etcd*.pem /etc/etcd/ssl
scp -r /etc/etcd 192.168.1.12:/etc/
scp -r /etc/etcd 192.168.1.13:/etc/
scp -r /etc/etcd 192.168.1.14:/etc/
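The three copy commands can also be written as a loop over the node list. The sketch below is a dry run by default: `RUN` is a hypothetical switch that, while unset, makes the function print each command instead of executing it.

```bash
# etcd node addresses from the environment section.
ETCD_NODES="192.168.1.12 192.168.1.13 192.168.1.14"

# Print (default) or execute the certificate copy for every etcd node.
# Set RUN="" before calling to actually run scp; leave it unset to preview.
distribute_etcd_certs() {
    for node in $ETCD_NODES; do
        ${RUN-echo} scp -r /etc/etcd "$node:/etc/"
    done
}

distribute_etcd_certs
```

Previewing first makes it easy to spot a wrong address before anything is copied.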

Install and start etcd on host etcd1 (192.168.1.12)

yum install -y etcd
cat << EOF > /etc/etcd/etcd.conf
#[Member]
#ETCD_CORS=""
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
#ETCD_WAL_DIR=""
ETCD_LISTEN_PEER_URLS="https://192.168.1.12:2380"
ETCD_LISTEN_CLIENT_URLS="https://127.0.0.1:2379,https://192.168.1.12:2379"
#ETCD_MAX_SNAPSHOTS="5"
#ETCD_MAX_WALS="5"
ETCD_NAME="etcd1"
#ETCD_SNAPSHOT_COUNT="100000"
#ETCD_HEARTBEAT_INTERVAL="100"
#ETCD_ELECTION_TIMEOUT="1000"
#ETCD_QUOTA_BACKEND_BYTES="0"
#ETCD_MAX_REQUEST_BYTES="1572864"
#ETCD_GRPC_KEEPALIVE_MIN_TIME="5s"
#ETCD_GRPC_KEEPALIVE_INTERVAL="2h0m0s"
#ETCD_GRPC_KEEPALIVE_TIMEOUT="20s"
#
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.1.12:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://127.0.0.1:2379,https://192.168.1.12:2379"
#ETCD_DISCOVERY=""
#ETCD_DISCOVERY_FALLBACK="proxy"
#ETCD_DISCOVERY_PROXY=""
#ETCD_DISCOVERY_SRV=""
ETCD_INITIAL_CLUSTER="etcd1=https://192.168.1.12:2380,etcd2=https://192.168.1.13:2380,etcd3=https://192.168.1.14:2380"
ETCD_INITIAL_CLUSTER_TOKEN="BigBoss"
#ETCD_INITIAL_CLUSTER_STATE="new"
#ETCD_STRICT_RECONFIG_CHECK="true"
#ETCD_ENABLE_V2="true"
#
#[Proxy]
#ETCD_PROXY="off"
#ETCD_PROXY_FAILURE_WAIT="5000"
#ETCD_PROXY_REFRESH_INTERVAL="30000"
#ETCD_PROXY_DIAL_TIMEOUT="1000"
#ETCD_PROXY_WRITE_TIMEOUT="5000"
#ETCD_PROXY_READ_TIMEOUT="0"
#
#[Security]
ETCD_CERT_FILE="/etc/etcd/ssl/etcd.pem"
ETCD_KEY_FILE="/etc/etcd/ssl/etcd-key.pem"
#ETCD_CLIENT_CERT_AUTH="false"
ETCD_TRUSTED_CA_FILE="/etc/etcd/ssl/etcd-ca.pem"
#ETCD_AUTO_TLS="false"
ETCD_PEER_CERT_FILE="/etc/etcd/ssl/etcd.pem"
ETCD_PEER_KEY_FILE="/etc/etcd/ssl/etcd-key.pem"
#ETCD_PEER_CLIENT_CERT_AUTH="false"
ETCD_PEER_TRUSTED_CA_FILE="/etc/etcd/ssl/etcd-ca.pem"
#ETCD_PEER_AUTO_TLS="false"
#
#[Logging]
#ETCD_DEBUG="false"
#ETCD_LOG_PACKAGE_LEVELS=""
#ETCD_LOG_OUTPUT="default"
#
#[Unsafe]
#ETCD_FORCE_NEW_CLUSTER="false"
#
#[Version]
#ETCD_VERSION="false"
#ETCD_AUTO_COMPACTION_RETENTION="0"
#
#[Profiling]
#ETCD_ENABLE_PPROF="false"
#ETCD_METRICS="basic"
#
#[Auth]
#ETCD_AUTH_TOKEN="simple"
EOF
chown -R etcd.etcd /etc/etcd
systemctl enable etcd
systemctl start etcd
systemctl status etcd

Install and start etcd on host etcd2 (192.168.1.13)

yum install -y etcd
cat << EOF > /etc/etcd/etcd.conf
#[Member]
#ETCD_CORS=""
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
#ETCD_WAL_DIR=""
ETCD_LISTEN_PEER_URLS="https://192.168.1.13:2380"
ETCD_LISTEN_CLIENT_URLS="https://127.0.0.1:2379,https://192.168.1.13:2379"
#ETCD_MAX_SNAPSHOTS="5"
#ETCD_MAX_WALS="5"
ETCD_NAME="etcd2"
#ETCD_SNAPSHOT_COUNT="100000"
#ETCD_HEARTBEAT_INTERVAL="100"
#ETCD_ELECTION_TIMEOUT="1000"
#ETCD_QUOTA_BACKEND_BYTES="0"
#ETCD_MAX_REQUEST_BYTES="1572864"
#ETCD_GRPC_KEEPALIVE_MIN_TIME="5s"
#ETCD_GRPC_KEEPALIVE_INTERVAL="2h0m0s"
#ETCD_GRPC_KEEPALIVE_TIMEOUT="20s"
#
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.1.13:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://127.0.0.1:2379,https://192.168.1.13:2379"
#ETCD_DISCOVERY=""
#ETCD_DISCOVERY_FALLBACK="proxy"
#ETCD_DISCOVERY_PROXY=""
#ETCD_DISCOVERY_SRV=""
ETCD_INITIAL_CLUSTER="etcd1=https://192.168.1.12:2380,etcd2=https://192.168.1.13:2380,etcd3=https://192.168.1.14:2380"
ETCD_INITIAL_CLUSTER_TOKEN="BigBoss"
#ETCD_INITIAL_CLUSTER_STATE="new"
#ETCD_STRICT_RECONFIG_CHECK="true"
#ETCD_ENABLE_V2="true"
#
#[Proxy]
#ETCD_PROXY="off"
#ETCD_PROXY_FAILURE_WAIT="5000"
#ETCD_PROXY_REFRESH_INTERVAL="30000"
#ETCD_PROXY_DIAL_TIMEOUT="1000"
#ETCD_PROXY_WRITE_TIMEOUT="5000"
#ETCD_PROXY_READ_TIMEOUT="0"
#
#[Security]
ETCD_CERT_FILE="/etc/etcd/ssl/etcd.pem"
ETCD_KEY_FILE="/etc/etcd/ssl/etcd-key.pem"
#ETCD_CLIENT_CERT_AUTH="false"
ETCD_TRUSTED_CA_FILE="/etc/etcd/ssl/etcd-ca.pem"
#ETCD_AUTO_TLS="false"
ETCD_PEER_CERT_FILE="/etc/etcd/ssl/etcd.pem"
ETCD_PEER_KEY_FILE="/etc/etcd/ssl/etcd-key.pem"
#ETCD_PEER_CLIENT_CERT_AUTH="false"
ETCD_PEER_TRUSTED_CA_FILE="/etc/etcd/ssl/etcd-ca.pem"
#ETCD_PEER_AUTO_TLS="false"
#
#[Logging]
#ETCD_DEBUG="false"
#ETCD_LOG_PACKAGE_LEVELS=""
#ETCD_LOG_OUTPUT="default"
#
#[Unsafe]
#ETCD_FORCE_NEW_CLUSTER="false"
#
#[Version]
#ETCD_VERSION="false"
#ETCD_AUTO_COMPACTION_RETENTION="0"
#
#[Profiling]
#ETCD_ENABLE_PPROF="false"
#ETCD_METRICS="basic"
#
#[Auth]
#ETCD_AUTH_TOKEN="simple"
EOF
chown -R etcd.etcd /etc/etcd
systemctl enable etcd
systemctl start etcd
systemctl status etcd

Install and start etcd on host etcd3 (192.168.1.14)

yum install -y etcd
cat << EOF > /etc/etcd/etcd.conf
#[Member]
#ETCD_CORS=""
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
#ETCD_WAL_DIR=""
ETCD_LISTEN_PEER_URLS="https://192.168.1.14:2380"
ETCD_LISTEN_CLIENT_URLS="https://127.0.0.1:2379,https://192.168.1.14:2379"
#ETCD_MAX_SNAPSHOTS="5"
#ETCD_MAX_WALS="5"
ETCD_NAME="etcd3"
#ETCD_SNAPSHOT_COUNT="100000"
#ETCD_HEARTBEAT_INTERVAL="100"
#ETCD_ELECTION_TIMEOUT="1000"
#ETCD_QUOTA_BACKEND_BYTES="0"
#ETCD_MAX_REQUEST_BYTES="1572864"
#ETCD_GRPC_KEEPALIVE_MIN_TIME="5s"
#ETCD_GRPC_KEEPALIVE_INTERVAL="2h0m0s"
#ETCD_GRPC_KEEPALIVE_TIMEOUT="20s"
#
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.1.14:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://127.0.0.1:2379,https://192.168.1.14:2379"
#ETCD_DISCOVERY=""
#ETCD_DISCOVERY_FALLBACK="proxy"
#ETCD_DISCOVERY_PROXY=""
#ETCD_DISCOVERY_SRV=""
ETCD_INITIAL_CLUSTER="etcd1=https://192.168.1.12:2380,etcd2=https://192.168.1.13:2380,etcd3=https://192.168.1.14:2380"
ETCD_INITIAL_CLUSTER_TOKEN="BigBoss"
#ETCD_INITIAL_CLUSTER_STATE="new"
#ETCD_STRICT_RECONFIG_CHECK="true"
#ETCD_ENABLE_V2="true"
#
#[Proxy]
#ETCD_PROXY="off"
#ETCD_PROXY_FAILURE_WAIT="5000"
#ETCD_PROXY_REFRESH_INTERVAL="30000"
#ETCD_PROXY_DIAL_TIMEOUT="1000"
#ETCD_PROXY_WRITE_TIMEOUT="5000"
#ETCD_PROXY_READ_TIMEOUT="0"
#
#[Security]
ETCD_CERT_FILE="/etc/etcd/ssl/etcd.pem"
ETCD_KEY_FILE="/etc/etcd/ssl/etcd-key.pem"
#ETCD_CLIENT_CERT_AUTH="false"
ETCD_TRUSTED_CA_FILE="/etc/etcd/ssl/etcd-ca.pem"
#ETCD_AUTO_TLS="false"
ETCD_PEER_CERT_FILE="/etc/etcd/ssl/etcd.pem"
ETCD_PEER_KEY_FILE="/etc/etcd/ssl/etcd-key.pem"
#ETCD_PEER_CLIENT_CERT_AUTH="false"
ETCD_PEER_TRUSTED_CA_FILE="/etc/etcd/ssl/etcd-ca.pem"
#ETCD_PEER_AUTO_TLS="false"
#
#[Logging]
#ETCD_DEBUG="false"
#ETCD_LOG_PACKAGE_LEVELS=""
#ETCD_LOG_OUTPUT="default"
#
#[Unsafe]
#ETCD_FORCE_NEW_CLUSTER="false"
#
#[Version]
#ETCD_VERSION="false"
#ETCD_AUTO_COMPACTION_RETENTION="0"
#
#[Profiling]
#ETCD_ENABLE_PPROF="false"
#ETCD_METRICS="basic"
#
#[Auth]
#ETCD_AUTH_TOKEN="simple"
EOF
chown -R etcd.etcd /etc/etcd
systemctl enable etcd
systemctl start etcd
systemctl status etcd
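The three etcd.conf files differ only in the member name and the node IP. As a rough sketch, the active (uncommented) settings could be produced from a single template; `render_etcd_conf` is a hypothetical helper, and the commented-out defaults are left out for brevity.

```bash
# Print the active etcd.conf settings for one member.
# $1: member name (etcd1, etcd2, etcd3)   $2: IP address of that node
render_etcd_conf() {
    name="$1"
    ip="$2"
    cat << EOF
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://127.0.0.1:2379,https://${ip}:2379"
ETCD_NAME="${name}"
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://127.0.0.1:2379,https://${ip}:2379"
ETCD_INITIAL_CLUSTER="etcd1=https://192.168.1.12:2380,etcd2=https://192.168.1.13:2380,etcd3=https://192.168.1.14:2380"
ETCD_INITIAL_CLUSTER_TOKEN="BigBoss"
ETCD_CERT_FILE="/etc/etcd/ssl/etcd.pem"
ETCD_KEY_FILE="/etc/etcd/ssl/etcd-key.pem"
ETCD_TRUSTED_CA_FILE="/etc/etcd/ssl/etcd-ca.pem"
ETCD_PEER_CERT_FILE="/etc/etcd/ssl/etcd.pem"
ETCD_PEER_KEY_FILE="/etc/etcd/ssl/etcd-key.pem"
ETCD_PEER_TRUSTED_CA_FILE="/etc/etcd/ssl/etcd-ca.pem"
EOF
}

# Example: on 192.168.1.13 one would run
#   render_etcd_conf etcd2 192.168.1.13 > /etc/etcd/etcd.conf
```

Note that `ETCD_NAME` must match the name used for that node in `ETCD_INITIAL_CLUSTER`.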

Check the cluster status

# Run on any etcd node

etcdctl --endpoints "https://192.168.1.12:2379,https://192.168.1.13:2379,https://192.168.1.14:2379" --ca-file=/etc/etcd/ssl/etcd-ca.pem \
  --cert-file=/etc/etcd/ssl/etcd.pem --key-file=/etc/etcd/ssl/etcd-key.pem cluster-health

member 399b596d3999ba01 is healthy: got healthy result from https://127.0.0.1:2379

member 7b170c49403f28a9 is healthy: got healthy result from https://127.0.0.1:2379

member be31245426ce45a9 is healthy: got healthy result from https://127.0.0.1:2379

cluster is healthy

etcdctl --endpoints "https://192.168.1.12:2379,https://192.168.1.13:2379,https://192.168.1.14:2379" --ca-file=/etc/etcd/ssl/etcd-ca.pem \
  --cert-file=/etc/etcd/ssl/etcd.pem --key-file=/etc/etcd/ssl/etcd-key.pem member list

399b596d3999ba01: name=etcd2 peerURLs=https://192.168.1.14:2380 clientURLs=https://127.0.0.1:2379,https://192.168.1.14:2379 isLeader=true

7b170c49403f28a9: name=etcd0 peerURLs=https://192.168.1.12:2380 clientURLs=https://127.0.0.1:2379,https://192.168.1.12:2379 isLeader=false

be31245426ce45a9: name=etcd1 peerURLs=https://192.168.1.13:2380 clientURLs=https://127.0.0.1:2379,https://192.168.1.13:2379 isLeader=false
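For scripted checks, the `cluster-health` output can be reduced to a count of healthy members. A minimal sketch, assuming the output format shown above; `count_healthy_members` is a hypothetical helper that reads that output on stdin.

```bash
# Count the "member ... is healthy" lines in etcdctl cluster-health output read from stdin.
count_healthy_members() {
    grep -c '^member .* is healthy'
}

# Example (same flags as the cluster-health command above):
#   etcdctl --endpoints ... cluster-health | count_healthy_members
# A three-node cluster in good shape should report 3.
```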

3. Prepare the Kubernetes certificates

Run on the master node

Create the working directory

mkdir -p $HOME/ssl && cd $HOME/ssl

Configure the root CA

cat > ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "HangZhou",

"L": "ZheJiang",

"O": "k8s",

"OU": "System"

}

],

"ca": {

"expiry": "87600h"

}

}

EOF

Generate the root CA

cfssl gencert -initca ca-csr.json | cfssljson -bare ca
ls -l ca*.pem
-rw-r--r-- 1 root root 1679 Nov 24 17:07 ca-key.pem
-rw-r--r-- 1 root root 1363 Nov 24 17:07 ca.pem

Configure the kube-apiserver certificate

Note: to use a custom cluster domain, replace the cluster / cluster.local entries with your own domain, for example:

"kubernetes.default.svc.ziji",

"kubernetes.default.svc.ziji.work"

# 10.96.0.1 is the first IP of the service-cluster-ip-range configured for kube-apiserver

cat > kube-apiserver-csr.json << EOF

{

"CN": "kube-apiserver",

"hosts": [

"127.0.0.1",

"192.168.1.12",

"192.168.1.13",

"192.168.1.14",

"192.168.1.15",

"192.168.1.16",

"192.168.1.17",

"192.168.1.18",

"192.168.1.19",

"10.96.0.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "HangZhou",

"L": "ZheJiang",

"O": "k8s",

"OU": "System"

}

]

}

EOF

Generate the kube-apiserver certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-apiserver-csr.json | cfssljson -bare kube-apiserver
ls -l kube-apiserver*.pem
-rw-r--r-- 1 root root 1679 Nov 24 17:16 kube-apiserver-key.pem
-rw-r--r-- 1 root root 1716 Nov 24 17:16 kube-apiserver.pem

Configure the kube-controller-manager certificate

The master and node IPs must be added here
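The `hosts` list in kube-apiserver-csr.json has to cover every address clients use to reach the apiserver, including the floating IP 192.168.1.15 and the service IP 10.96.0.1. A quick sanity check before signing might look like the sketch below; `missing_hosts` is a hypothetical helper that simply greps the CSR file for each quoted entry.

```bash
# Print every host from the argument list that does not appear as a quoted entry in the CSR file.
# $1: CSR JSON file, remaining arguments: hosts that must be present
missing_hosts() {
    csr_file="$1"
    shift
    for host in "$@"; do
        grep -qF "\"$host\"" "$csr_file" || printf '%s\n' "$host"
    done
}

# Example: prints nothing when the VIP and the service IP are both listed.
#   missing_hosts kube-apiserver-csr.json 192.168.1.15 10.96.0.1
```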

cat > kube-controller-manager-csr.json << EOF

{

"CN": "system:kube-controller-manager",

"hosts": [

"127.0.0.1",

"192.168.1.16",

"192.168.1.17",

"192.168.1.18",

"192.168.1.19"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "HangZhou",

"L": "ZheJiang",

"O": "system:kube-controller-manager",

"OU": "System"

}

]

}

EOF

Generate the kube-controller-manager certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager
ls -l kube-controller-manager*.pem
-rw-r--r-- 1 root root 1675 Nov 24 17:12 kube-controller-manager-key.pem
-rw-r--r-- 1 root root 1562 Nov 24 17:12 kube-controller-manager.pem

Configure the kube-scheduler certificate

cat > kube-scheduler-csr.json << EOF

{

"CN": "system:kube-scheduler",

"hosts": [

"127.0.0.1",

"192.168.1.16",

"192.168.1.17",

"192.168.1.18",

"192.168.1.19"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "HangZhou",

"L": "ZheJiang",

"O": "system:kube-scheduler",

"OU": "System"

}

]

}

EOF

Generate the kube-scheduler certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler
ls -l kube-scheduler*.pem
-rw-r--r-- 1 root root 1675 Nov 24 17:13 kube-scheduler-key.pem
-rw-r--r-- 1 root root 1537 Nov 24 17:13 kube-scheduler.pem

Configure the kube-proxy certificate

cat > kube-proxy-csr.json << EOF

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "HangZhou",

"L": "ZheJiang",

"O": "system:kube-proxy",

"OU": "System"

}

]

}

EOF

Generate the kube-proxy certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
ls -l kube-proxy*.pem
-rw-r--r-- 1 root root 1679 Nov 24 17:14 kube-proxy-key.pem
-rw-r--r-- 1 root root 1428 Nov 24 17:14 kube-proxy.pem

Configure the admin certificate

cat > admin-csr.json << EOF

{

"CN": "admin",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "HangZhou",

"L": "ZheJiang",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

Generate the admin certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin
ls -l admin*.pem
-rw-r--r-- 1 root root 1679 Nov 24 17:15 admin-key.pem
-rw-r--r-- 1 root root 1407 Nov 24 17:15 admin.pem

Copy the generated certificate files and distribute them to the other nodes

mkdir -pv /etc/kubernetes/pki
cp ca*.pem admin*.pem kube-proxy*.pem kube-scheduler*.pem kube-controller-manager*.pem kube-apiserver*.pem /etc/kubernetes/pki
scp -r /etc/kubernetes 192.168.1.17:/etc/
scp -r /etc/kubernetes 192.168.1.18:/etc/
scp -r /etc/kubernetes 192.168.1.19:/etc/

Note: worker nodes only need the ca, kube-proxy, admin, kubelet, and kube-apiserver certificates; the kube-controller-manager and kube-scheduler certificates do not need to be copied to them.

4. Install the master

Download and unpack the server package and configure the environment variables

cd /root

wget https://dl.k8s.io/v1.11.4/kubernetes-server-linux-amd64.tar.gz

tar -xf kubernetes-server-linux-amd64.tar.gz -C /usr/local

mv /usr/local/kubernetes /usr/local/kubernetes-v1.11.4

ln -s kubernetes-v1.11.4 /usr/local/kubernetes

# Set the environment variables and enable kubectl command completion

yum install bash-completion -y

cat > /etc/profile.d/kubernetes.sh << EOF

k8s_home=/usr/local/kubernetes

export PATH=\$k8s_home/server/bin:\$PATH

source <(kubectl completion bash)

EOF

source /etc/profile.d/kubernetes.sh

kubectl version

Client Version: version.Info{Major:"1", Minor:"11", GitVersion:"v1.11.4", GitCommit:"bf9a868e8ea3d3a8fa53cbb22f566771b3f8068b", GitTreeState:"clean", BuildDate:"2018-10-25T19:17:06Z", GoVersion:"go1.10.3", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"11", GitVersion:"v1.11.4", GitCommit:"bf9a868e8ea3d3a8fa53cbb22f566771b3f8068b", GitTreeState:"clean", BuildDate:"2018-10-25T19:06:30Z", GoVersion:"go1.10.3", Compiler:"gc", Platform:"linux/amd64"}

Generate the kubeconfig files

Configure TLS Bootstrapping

export BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')

cat > /etc/kubernetes/token.csv << EOF

${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"

EOF
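The token pipeline above reads 16 random bytes and prints them as 32 lowercase hex characters; each line of token.csv then has the form token,user,uid,"group". The sketch below wraps both steps in functions so the format can be checked before the file is written. The function names are hypothetical.

```bash
# Generate a 32-character hex token from 16 random bytes (same pipeline as above,
# with the trailing newline stripped as well).
new_bootstrap_token() {
    head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
}

# Format one token.csv line: token,user,uid,"group"
token_csv_line() {
    printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$1"
}

# Example:
#   BOOTSTRAP_TOKEN=$(new_bootstrap_token)
#   token_csv_line "$BOOTSTRAP_TOKEN" > /etc/kubernetes/token.csv
```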

Create the kubelet bootstrapping kubeconfig

# Set the kube-apiserver address. The apiserver will later be made highly available, so the apiserver floating IP is used here

export KUBE_APISERVER="https://192.168.1.15:8443"

kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kubelet-bootstrap.conf
kubectl config set-credentials kubelet-bootstrap \
  --token=${BOOTSTRAP_TOKEN} \
  --kubeconfig=kubelet-bootstrap.conf
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kubelet-bootstrap \
  --kubeconfig=kubelet-bootstrap.conf
kubectl config use-context default --kubeconfig=kubelet-bootstrap.conf

Create the kube-controller-manager kubeconfig

kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-controller-manager.conf
kubectl config set-credentials kube-controller-manager \
  --client-certificate=/etc/kubernetes/pki/kube-controller-manager.pem \
  --client-key=/etc/kubernetes/pki/kube-controller-manager-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-controller-manager.conf
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-controller-manager \
  --kubeconfig=kube-controller-manager.conf
kubectl config use-context default --kubeconfig=kube-controller-manager.conf

Create the kube-scheduler kubeconfig

kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-scheduler.conf
kubectl config set-credentials kube-scheduler \
  --client-certificate=/etc/kubernetes/pki/kube-scheduler.pem \
  --client-key=/etc/kubernetes/pki/kube-scheduler-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-scheduler.conf
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-scheduler \
  --kubeconfig=kube-scheduler.conf
kubectl config use-context default --kubeconfig=kube-scheduler.conf

Create the kube-proxy kubeconfig

kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-proxy.conf
kubectl config set-credentials kube-proxy \
  --client-certificate=/etc/kubernetes/pki/kube-proxy.pem \
  --client-key=/etc/kubernetes/pki/kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.conf
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.conf
kubectl config use-context default --kubeconfig=kube-proxy.conf

Create the admin kubeconfig

kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=admin.conf
kubectl config set-credentials admin \
  --client-certificate=/etc/kubernetes/pki/admin.pem \
  --client-key=/etc/kubernetes/pki/admin-key.pem \
  --embed-certs=true \
  --kubeconfig=admin.conf
kubectl config set-context default \
  --cluster=kubernetes \
  --user=admin \
  --kubeconfig=admin.conf
kubectl config use-context default --kubeconfig=admin.conf

Copy kubelet-bootstrap.conf and kube-proxy.conf to the other nodes

scp kubelet-bootstrap.conf kube-proxy.conf 192.168.1.17:/etc/kubernetes
scp kubelet-bootstrap.conf kube-proxy.conf 192.168.1.18:/etc/kubernetes
scp kubelet-bootstrap.conf kube-proxy.conf 192.168.1.19:/etc/kubernetes
cd $HOME

Configure and start kube-apiserver

On 192.168.1.12, 192.168.1.13, and 192.168.1.14, create the etcd directory and copy the etcd CA and certificates into it

mkdir -pv /etc/kubernetes/pki/etcd
cd /etc/etcd/ssl
cp etcd-ca.pem etcd-key.pem etcd.pem /etc/kubernetes/pki/etcd
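The certificate-based kubeconfig files (kube-controller-manager, kube-scheduler, kube-proxy, admin) all follow the same four-step pattern, so they could be generated by one helper. This is only a sketch: `make_cert_kubeconfig` and the `KUBECTL` override are hypothetical, while the kubectl subcommands and flags are the ones used in the commands above. Setting `KUBECTL=echo` turns the helper into a dry run.

```bash
KUBECTL="${KUBECTL:-kubectl}"
KUBE_APISERVER="${KUBE_APISERVER:-https://192.168.1.15:8443}"
PKI_DIR=/etc/kubernetes/pki

# make_cert_kubeconfig USER CERT_BASENAME OUTPUT_FILE
# Builds a kubeconfig whose user authenticates with $PKI_DIR/CERT_BASENAME(.pem|-key.pem).
make_cert_kubeconfig() {
    user="$1"
    cert="$2"
    out="$3"
    $KUBECTL config set-cluster kubernetes \
        --certificate-authority="$PKI_DIR/ca.pem" \
        --embed-certs=true \
        --server="$KUBE_APISERVER" \
        --kubeconfig="$out" &&
    $KUBECTL config set-credentials "$user" \
        --client-certificate="$PKI_DIR/${cert}.pem" \
        --client-key="$PKI_DIR/${cert}-key.pem" \
        --embed-certs=true \
        --kubeconfig="$out" &&
    $KUBECTL config set-context default \
        --cluster=kubernetes --user="$user" --kubeconfig="$out" &&
    $KUBECTL config use-context default --kubeconfig="$out"
}

# Example:
#   make_cert_kubeconfig kube-proxy kube-proxy kube-proxy.conf
#   make_cert_kubeconfig admin admin admin.conf
```

The kubelet bootstrap kubeconfig is not covered because it authenticates with a token rather than a client certificate.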

Generate the service account key (run on the master node)

# Distribute the apiserver binary and certificate files to the apiserver nodes
openssl genrsa -out /etc/kubernetes/pki/sa.key 2048
openssl rsa -in /etc/kubernetes/pki/sa.key -pubout -out /etc/kubernetes/pki/sa.pub
ls -l /etc/kubernetes/pki/sa.*
-rw-r--r-- 1 root root 1675 Nov 24 17:27 /etc/kubernetes/pki/sa.key
-rw-r--r-- 1 root root 451 Nov 24 17:27 /etc/kubernetes/pki/sa.pub
scp -r /etc/kubernetes/pki/sa.* 192.168.1.12:/etc/kubernetes/pki/
scp -r /etc/kubernetes/pki/sa.* 192.168.1.13:/etc/kubernetes/pki/
scp -r /etc/kubernetes/pki/sa.* 192.168.1.14:/etc/kubernetes/pki/
scp -r /usr/local/kubernetes/server/bin/kube-apiserver 192.168.1.12:/usr/local/kubernetes/server/bin/kube-apiserver
scp -r /usr/local/kubernetes/server/bin/kube-apiserver 192.168.1.13:/usr/local/kubernetes/server/bin/kube-apiserver
scp -r /usr/local/kubernetes/server/bin/kube-apiserver 192.168.1.14:/usr/local/kubernetes/server/bin/kube-apiserver
cd $HOME

Create the apiserver unit file on each of 192.168.1.12, 192.168.1.13, and 192.168.1.14

cat > /etc/systemd/system/kube-apiserver.service << EOF
[Unit]
Description=Kubernetes API Service
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
EnvironmentFile=-/etc/kubernetes/config
EnvironmentFile=-/etc/kubernetes/apiserver
ExecStart=/usr/local/kubernetes/server/bin/kube-apiserver \\
    \$KUBE_LOGTOSTDERR \\
    \$KUBE_LOG_LEVEL \\
    \$KUBE_ETCD_ARGS \\
    \$KUBE_API_ADDRESS \\
    \$KUBE_SERVICE_ADDRESSES \\
    \$KUBE_ADMISSION_CONTROL \\
    \$KUBE_APISERVER_ARGS
Restart=on-failure
Type=notify
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

The /etc/kubernetes/config file created below is shared by kube-apiserver, kube-controller-manager, kube-scheduler, kubelet, and kube-proxy

Node 192.168.1.12

cat > /etc/kubernetes/config << EOF
KUBE_LOGTOSTDERR="--logtostderr=true"
KUBE_LOG_LEVEL="--v=0"
EOF
cat > /etc/kubernetes/apiserver << EOF
KUBE_API_ADDRESS="--advertise-address=192.168.1.12"
KUBE_ETCD_ARGS="--etcd-servers=https://192.168.1.12:2379,https://192.168.1.13:2379,https://192.168.1.14:2379 --etcd-cafile=/etc/kubernetes/pki/etcd/etcd-ca.pem --etcd-certfile=/etc/kubernetes/pki/etcd/etcd.pem --etcd-keyfile=/etc/kubernetes/pki/etcd/etcd-key.pem"
KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.96.0.0/16"
KUBE_ADMISSION_CONTROL="--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota"
KUBE_APISERVER_ARGS="--allow-privileged=true --authorization-mode=Node,RBAC --enable-bootstrap-token-auth=true --token-auth-file=/etc/kubernetes/token.csv --service-node-port-range=30000-32767 --tls-cert-file=/etc/kubernetes/pki/kube-apiserver.pem --tls-private-key-file=/etc/kubernetes/pki/kube-apiserver-key.pem --client-ca-file=/etc/kubernetes/pki/ca.pem --service-account-key-file=/etc/kubernetes/pki/sa.pub --enable-swagger-ui=true --secure-port=6443 --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname --anonymous-auth=false --kubelet-client-certificate=/etc/kubernetes/pki/admin.pem --kubelet-client-key=/etc/kubernetes/pki/admin-key.pem"
EOF

Node 192.168.1.13

cat > /etc/kubernetes/config << EOF
KUBE_LOGTOSTDERR="--logtostderr=true"
KUBE_LOG_LEVEL="--v=0"
EOF
cat > /etc/kubernetes/apiserver << EOF
KUBE_API_ADDRESS="--advertise-address=192.168.1.13"
KUBE_ETCD_ARGS="--etcd-servers=https://192.168.1.12:2379,https://192.168.1.13:2379,https://192.168.1.14:2379 --etcd-cafile=/etc/kubernetes/pki/etcd/etcd-ca.pem --etcd-certfile=/etc/kubernetes/pki/etcd/etcd.pem --etcd-keyfile=/etc/kubernetes/pki/etcd/etcd-key.pem"
KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.96.0.0/16"
KUBE_ADMISSION_CONTROL="--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota"
KUBE_APISERVER_ARGS="--allow-privileged=true --authorization-mode=Node,RBAC --enable-bootstrap-token-auth=true --token-auth-file=/etc/kubernetes/token.csv --service-node-port-range=30000-32767 --tls-cert-file=/etc/kubernetes/pki/kube-apiserver.pem --tls-private-key-file=/etc/kubernetes/pki/kube-apiserver-key.pem --client-ca-file=/etc/kubernetes/pki/ca.pem --service-account-key-file=/etc/kubernetes/pki/sa.pub --enable-swagger-ui=true --secure-port=6443 --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname --anonymous-auth=false --kubelet-client-certificate=/etc/kubernetes/pki/admin.pem --kubelet-client-key=/etc/kubernetes/pki/admin-key.pem"
EOF

Node 192.168.1.14

cat > /etc/kubernetes/config << EOF
KUBE_LOGTOSTDERR="--logtostderr=true"
KUBE_LOG_LEVEL="--v=0"
EOF
cat > /etc/kubernetes/apiserver << EOF
KUBE_API_ADDRESS="--advertise-address=192.168.1.14"
KUBE_ETCD_ARGS="--etcd-servers=https://192.168.1.12:2379,https://192.168.1.13:2379,https://192.168.1.14:2379 --etcd-cafile=/etc/kubernetes/pki/etcd/etcd-ca.pem --etcd-certfile=/etc/kubernetes/pki/etcd/etcd.pem --etcd-keyfile=/etc/kubernetes/pki/etcd/etcd-key.pem"
KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.96.0.0/16"
KUBE_ADMISSION_CONTROL="--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota"
KUBE_APISERVER_ARGS="--allow-privileged=true --authorization-mode=Node,RBAC --enable-bootstrap-token-auth=true --token-auth-file=/etc/kubernetes/token.csv --service-node-port-range=30000-32767 --tls-cert-file=/etc/kubernetes/pki/kube-apiserver.pem --tls-private-key-file=/etc/kubernetes/pki/kube-apiserver-key.pem --client-ca-file=/etc/kubernetes/pki/ca.pem --service-account-key-file=/etc/kubernetes/pki/sa.pub --enable-swagger-ui=true --secure-port=6443 --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname --anonymous-auth=false --kubelet-client-certificate=/etc/kubernetes/pki/admin.pem --kubelet-client-key=/etc/kubernetes/pki/admin-key.pem"
EOF

Start the kube-apiserver service
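The `--etcd-servers` value is identical on all three apiserver nodes and should list each etcd member exactly once. One way to avoid hand-editing the list is to build it from the node addresses, as in this sketch; `etcd_servers_flag` is a hypothetical helper.

```bash
# Build the --etcd-servers flag from a list of etcd node IPs (client port 2379).
etcd_servers_flag() {
    urls=""
    for ip in "$@"; do
        # Append with a comma only when the list is already non-empty.
        urls="${urls:+$urls,}https://${ip}:2379"
    done
    printf -- '--etcd-servers=%s\n' "$urls"
}

etcd_servers_flag 192.168.1.12 192.168.1.13 192.168.1.14
# prints: --etcd-servers=https://192.168.1.12:2379,https://192.168.1.13:2379,https://192.168.1.14:2379
```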

systemctl daemon-reload

systemctl enable kube-apiserver

systemctl start kube-apiserver

systemctl status kube-apiserver

● kube-apiserver.service - Kubernetes API Service

Loaded: loaded (/etc/systemd/system/kube-apiserver.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2018-11-28 15:33:06 CST; 1 day 4h ago

Docs: https://github.com/kubernetes/kubernetes

Main PID: 947 (kube-apiserver)

CGroup: /system.slice/kube-apiserver.service

└─947 /data/apps/kubernetes/server/bin/kube-apiserver --logtostderr=true --v=0 --etcd-servers=https://192.168.1.12:2379,https://192.168.1.13:2379,https://192.168.1.14:2379 --etcd-cafile=/etc/kubernetes/pki/etcd/e...

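Access test

curl -k https://192.168.1.12:6443/

The following response indicates that the apiserver is up. The 401 is expected because the request carries no credentials and --anonymous-auth=false is set: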

{

"kind": "Status",

"apiVersion": "v1",

"metadata": {

},

"status": "Failure",

"message": "Unauthorized",

"reason": "Unauthorized",

"code": 401

}
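When the same check is run against all three apiserver nodes, it is often enough to look at the HTTP status code alone. The sketch below maps a status code to a short verdict; `describe_apiserver_code` is a hypothetical helper, and the mapping is an interpretation for this setup rather than anything defined by Kubernetes. curl reports `000` when no HTTP response was received.

```bash
# Translate the HTTP status code returned by the apiserver into a short verdict.
describe_apiserver_code() {
    case "$1" in
        200) echo "reachable, request authorized" ;;
        401|403) echo "reachable, credentials required or rejected" ;;
        000) echo "unreachable" ;;
        *) echo "unexpected HTTP status $1" ;;
    esac
}

# Example:
#   code=$(curl -k -s -o /dev/null -w '%{http_code}' https://192.168.1.12:6443/)
#   describe_apiserver_code "$code"
```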

Configure a highly available apiserver deployment

Install the required packages

- 192.168.1.12 apiserver-0
- 192.168.1.13 apiserver-1
- 192.168.1.14 apiserver-2

Install haproxy and keepalived on each apiserver cluster node

yum install haproxy keepalived -y

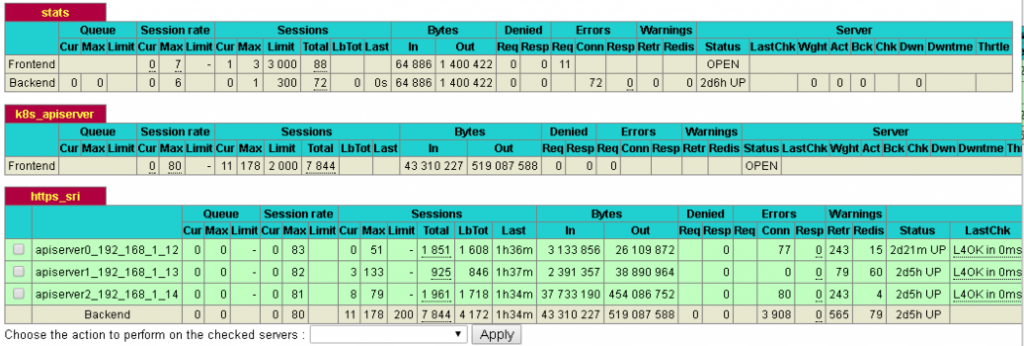

Configure haproxy (/etc/haproxy/haproxy.cfg)

global
    log 127.0.0.1 local2
    chroot /var/lib/haproxy
    pidfile /var/run/haproxy.pid
    maxconn 4000
    user haproxy
    group haproxy
    daemon
    stats socket /var/lib/haproxy/stats

defaults
    mode tcp
    log global
    option tcplog
    option dontlognull
    option redispatch
    retries 3
    timeout queue 1m
    timeout connect 10s
    timeout client 1m
    timeout server 1m
    timeout check 10s
    maxconn 3000

listen stats
    mode http
    bind :10086
    stats enable
    stats uri /admin?stats
    stats auth admin:admin
    stats admin if TRUE

frontend k8s_apiserver *:8443
    mode tcp
    maxconn 2000
    default_backend https_sri

backend https_sri
    balance roundrobin
    server apiserver0_192_168_1_12 192.168.1.12:6443 check inter 2000 fall 2 rise 2 weight 1
    server apiserver1_192_168_1_13 192.168.1.13:6443 check inter 2000 fall 2 rise 2 weight 1
    server apiserver2_192_168_1_14 192.168.1.14:6443 check inter 2000 fall 2 rise 2 weight 1

Configure keepalived
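The `server` lines of the backend follow a fixed naming pattern (index plus the IP with dots replaced by underscores), so they could be generated from the apiserver address list. A minimal sketch, assuming the same health-check options as in the configuration above; `haproxy_backend_servers` is a hypothetical helper.

```bash
# Print one haproxy "server" line per apiserver IP, using the naming scheme from the config above.
haproxy_backend_servers() {
    index=0
    for ip in "$@"; do
        printf '    server apiserver%d_%s %s:6443 check inter 2000 fall 2 rise 2 weight 1\n' \
            "$index" "$(printf '%s' "$ip" | tr '.' '_')" "$ip"
        index=$((index + 1))
    done
}

haproxy_backend_servers 192.168.1.12 192.168.1.13 192.168.1.14
```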

! Configuration File for keepalived

global_defs {

notification_email {

test@test.com

}

notification_email_from admin@test.com

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS_MASTER_APISERVER

}

vrrp_script check_haproxy {

script "/etc/keepalived/check_haproxy.sh"

interval 3

}

vrrp_instance VI_1 {

state MASTER # MASTER on the primary node; set the other two nodes to BACKUP

interface ens192 # network interface name

virtual_router_id 60

priority 100 # the priority of a backup node must not exceed 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.1.15/24 # VIP (floating IP of the apiserver)

}

track_script {

check_haproxy

}

}
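Only the `state` and `priority` lines differ between the three keepalived nodes. The sketch below prints those two lines for a given node index; the backup priorities 90 and 80 are an assumption (the configuration only requires them to be lower than the primary's 100), and the function name is hypothetical.

```shell
#!/bin/sh
# Print the per-node "state" and "priority" lines for vrrp_instance VI_1.
# Index 0 is the primary (MASTER, 100). Backups get 90, 80, ... which is an
# illustrative choice, not a value taken from the original setup.
keepalived_role() {
  idx=$1
  if [ "$idx" -eq 0 ]; then
    echo "state MASTER"
    echo "priority 100"
  else
    echo "state BACKUP"
    echo "priority $((100 - idx * 10))"
  fi
}

keepalived_role 0
keepalived_role 1
keepalived_role 2
```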

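Configure the check script

vi /etc/keepalived/check_haproxy.sh
# Write the following content, then make the script executable:
# chmod +x /etc/keepalived/check_haproxy.sh

#!/bin/bash
# Stop keepalived when haproxy is not running, so the VIP moves to another node
flag=$(systemctl status haproxy &> /dev/null; echo $?)
if [[ $flag != 0 ]]; then
    echo "haproxy is down,close the keepalived"
    systemctl stop keepalived
fi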

Modify the system service

vi /usr/lib/systemd/system/keepalived.service

[Unit]
Description=LVS and VRRP High Availability Monitor
After=syslog.target network-online.target
# Add the following line (systemd does not allow a trailing comment on the same line)
Requires=haproxy.service

[Service]
Type=forking
PIDFile=/var/run/keepalived.pid
KillMode=process
EnvironmentFile=-/etc/sysconfig/keepalived
ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS
ExecReload=/bin/kill -HUP $MAINPID

[Install]
WantedBy=multi-user.target

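Allow the VRRP protocol through firewalld

keepalived sends VRRP advertisements to the multicast address 224.0.0.18 unless unicast peers are configured, so the rule matches that destination:

firewall-cmd --direct --permanent --add-rule ipv4 filter INPUT 0 --in-interface ens192 --destination 224.0.0.18 --protocol vrrp -j ACCEPT
firewall-cmd --reload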

Log in to each apiserver node and start the following services

[root@ziji-etcd0-apiserver-192-168-1-12 ~]# systemctl enable haproxy
[root@ziji-etcd0-apiserver-192-168-1-12 ~]# systemctl start haproxy
[root@ziji-etcd0-apiserver-192-168-1-12 ~]# systemctl status haproxy
● haproxy.service - HAProxy Load Balancer
   Loaded: loaded (/usr/lib/systemd/system/haproxy.service; enabled; vendor preset: disabled)
   Active: active (running) since Wed 2018-11-28 09:49:03 CST; 2 days ago
 Main PID: 77138 (haproxy-systemd)
   CGroup: /system.slice/haproxy.service
           ├─77138 /usr/sbin/haproxy-systemd-wrapper -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid
           ├─77139 /usr/sbin/haproxy -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid -Ds
           └─77140 /usr/sbin/haproxy -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid -Ds
Warning: Journal has been rotated since unit was started. Log output is incomplete or unavailable.

[root@ziji-etcd0-apiserver-192-168-1-12 ~]# systemctl enable keepalived

[root@ziji-etcd0-apiserver-192-168-1-12 ~]# systemctl start keepalived

[root@ziji-etcd0-apiserver-192-168-1-12 ~]# systemctl status keepalived

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2018-11-28 09:49:04 CST; 2 days ago

Main PID: 77143 (keepalived)

CGroup: /system.slice/keepalived.service

├─77143 /usr/sbin/keepalived -D

├─77144 /usr/sbin/keepalived -D

└─77145 /usr/sbin/keepalived -D

Warning: Journal has been rotated since unit was started. Log output is incomplete or unavailable.

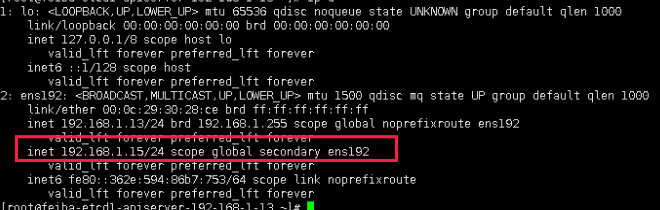

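Run ip a and check that the floating IP (192.168.1.15) is bound on the MASTER node.

Open http://192.168.1.15:10086/admin?stats and log in to the haproxy statistics page to confirm that the backends are healthy.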
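The floating-IP check can be automated by searching the address list for the VIP. This is a small sketch, assuming the one-line output format of `ip -o -4 addr show`; the helper name `has_vip` is hypothetical.

```shell
#!/bin/sh
# Succeeds if the given IPv4 address appears in `ip -o -4 addr show` style
# output read from stdin. grep -F matches the dots literally, and the trailing
# "/" keeps 192.168.1.1 from matching 192.168.1.15.
has_vip() {
  grep -qF "inet $1/"
}

# Example use on an apiserver node (not executed here):
#   ip -o -4 addr show dev ens192 | has_vip 192.168.1.15 && echo "VIP is here"
```

Only the node currently holding the MASTER role should report the VIP.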

Configure and start kube-controller-manager

Unit file (master node)

cat > /etc/systemd/system/kube-controller-manager.service << EOF
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/etc/kubernetes/config
EnvironmentFile=-/etc/kubernetes/controller-manager
ExecStart=/usr/local/kubernetes/server/bin/kube-controller-manager \\
    \$KUBE_LOGTOSTDERR \\
    \$KUBE_LOG_LEVEL \\
    \$KUBECONFIG \\
    \$KUBE_CONTROLLER_MANAGER_ARGS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

Configuration file

cat > /etc/kubernetes/controller-manager << EOF
KUBECONFIG="--kubeconfig=/etc/kubernetes/kube-controller-manager.conf"
KUBE_CONTROLLER_MANAGER_ARGS="--address=127.0.0.1 --cluster-cidr=10.96.0.0/16 --cluster-name=kubernetes --cluster-signing-cert-file=/etc/kubernetes/pki/ca.pem --cluster-signing-key-file=/etc/kubernetes/pki/ca-key.pem --service-account-private-key-file=/etc/kubernetes/pki/sa.key --root-ca-file=/etc/kubernetes/pki/ca.pem --leader-elect=true --use-service-account-credentials=true --node-monitor-grace-period=10s --pod-eviction-timeout=10s --allocate-node-cidrs=true --controllers=*,bootstrapsigner,tokencleaner"
EOF

Start the service

systemctl daemon-reload

systemctl enable kube-controller-manager

systemctl start kube-controller-manager

systemctl status kube-controller-manager

● kube-controller-manager.service

Loaded: loaded (/etc/systemd/system/kube-controller-manager.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2018-11-28 13:53:00 CST; 1 day 6h ago

Main PID: 655 (kube-controller)

Tasks: 9

Memory: 97.6M

CGroup: /system.slice/kube-controller-manager.service

└─655 /usr/local/kubernetes/server/bin/kube-controller-manager --logtostderr=true --v=0 --kubeconfig=/etc/kubernetes/kube-controller-manager.conf --address=127.0.0.1 --cluster-...

Configure and start kube-scheduler

systemd unit file

cat > /etc/systemd/system/kube-scheduler.service << EOF
[Unit]
Description=Kubernetes Scheduler Plugin
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/etc/kubernetes/config
EnvironmentFile=-/etc/kubernetes/scheduler
ExecStart=/usr/local/kubernetes/server/bin/kube-scheduler \\
    \$KUBE_LOGTOSTDERR \\
    \$KUBE_LOG_LEVEL \\
    \$KUBECONFIG \\
    \$KUBE_SCHEDULER_ARGS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

Configuration file

cat > /etc/kubernetes/scheduler << EOF
KUBECONFIG="--kubeconfig=/etc/kubernetes/kube-scheduler.conf"
KUBE_SCHEDULER_ARGS="--leader-elect=true --address=127.0.0.1"
EOF

Start the service

systemctl daemon-reload

systemctl enable kube-scheduler

systemctl start kube-scheduler

systemctl status kube-scheduler

● kube-scheduler.service - Kubernetes Scheduler Plugin

Loaded: loaded (/etc/systemd/system/kube-scheduler.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2018-11-28 13:53:00 CST; 1 day 6h ago

Docs: https://github.com/kubernetes/kubernetes

Main PID: 645 (kube-scheduler)

Tasks: 11

Memory: 29.2M

CGroup: /system.slice/kube-scheduler.service

└─645 /usr/local/kubernetes/server/bin/kube-scheduler --logtostderr=true --v=0 --kubeconfig=/etc/kubernetes/kube-scheduler.conf --leader-elect=true --address=127.0.0.1

Configure kubectl

rm -rf $HOME/.kube
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
kubectl get node

Check the status of each component

kubectl get componentstatuses

[root@master ~]# kubectl get componentstatuses

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-1 Healthy {"health": "true"}

etcd-0 Healthy {"health": "true"}

etcd-2 Healthy {"health": "true"}
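For scripted checks it can be handy to list only the components that are not healthy. The sketch below filters the table printed by `kubectl get componentstatuses`; the helper name `unhealthy_components` is an assumption, and it relies only on the NAME and STATUS columns shown in the output of that command.

```shell
#!/bin/sh
# Read `kubectl get componentstatuses` output on stdin and print the names of
# components whose STATUS column is anything other than "Healthy".
# NR > 1 skips the header row.
unhealthy_components() {
  awk 'NR > 1 && $2 != "Healthy" { print $1 }'
}

# Example use on the master (not executed here):
#   kubectl get componentstatuses | unhealthy_components
```

An empty result means every listed component reported Healthy.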

Configure kubelet to use bootstrap

kubectl create clusterrolebinding kubelet-bootstrap \
    --clusterrole=system:node-bootstrapper \
    --user=kubelet-bootstrap

5. Configure CNI (flanneld) and kubelet

Configure and start kubelet (required on both the master and the node machines)

# Unit file
cat > /etc/systemd/system/kubelet.service << EOF
[Unit]
Description=Kubernetes Kubelet Server
Documentation=https://github.com/kubernetes/kubernetes
After=docker.service
Requires=docker.service

[Service]
EnvironmentFile=-/etc/kubernetes/config
EnvironmentFile=-/etc/kubernetes/kubelet
ExecStart=/usr/local/kubernetes/server/bin/kubelet \\
    \$KUBE_LOGTOSTDERR \\
    \$KUBE_LOG_LEVEL \\
    \$KUBELET_CONFIG \\
    \$KUBELET_HOSTNAME \\
    \$KUBELET_POD_INFRA_CONTAINER \\
    \$KUBELET_ARGS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

# Shared logging options
cat > /etc/kubernetes/config << EOF
KUBE_LOGTOSTDERR="--logtostderr=true"
KUBE_LOG_LEVEL="--v=0"
EOF

# kubelet command-line options
cat > /etc/kubernetes/kubelet << EOF
KUBELET_HOSTNAME="--hostname-override=192.168.1.16"
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.1"
KUBELET_CONFIG="--config=/etc/kubernetes/kubelet-config.yml"
KUBELET_ARGS="--bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.conf --kubeconfig=/etc/kubernetes/kubelet.conf --cert-dir=/etc/kubernetes/pki"
EOF

# KubeletConfiguration file
cat > /etc/kubernetes/kubelet-config.yml << EOF
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 192.168.1.16
port: 10250
cgroupDriver: cgroupfs
clusterDNS:
  - 10.96.0.114
clusterDomain: cluster.local.
hairpinMode: promiscuous-bridge
serializeImagePulls: false
authentication:
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.pem
  anonymous:
    enabled: false
  webhook:
    enabled: false
EOF

Start kubelet

systemctl daemon-reload

systemctl enable kubelet

systemctl restart kubelet

systemctl status kubelet

● kubelet.service - Kubernetes Kubelet Server

Loaded: loaded (/etc/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2018-11-28 13:53:11 CST; 1 day 7h ago

Docs: https://github.com/kubernetes/kubernetes

Main PID: 1810 (kubelet)

Tasks: 15

Memory: 107.4M

CGroup: /system.slice/kubelet.service

└─1810 /usr/local/kubernetes/server/bin/kubelet --logtostderr=true --v=0 --config=/etc/kubernetes/kubelet-conf...

Configure the kubelet component on the node machines

node1, 192.168.1.17

cd /root
wget https://dl.k8s.io/v1.11.4/kubernetes-node-linux-amd64.tar.gz
tar -xf kubernetes-node-linux-amd64.tar.gz -C /usr/local/
mv /usr/local/kubernetes /usr/local/kubernetes-v1.11.4
ln -s kubernetes-v1.11.4 /usr/local/kubernetes

Configure and start kubelet

# systemd unit file (the node tarball places the binaries under node/bin)
cat > /etc/systemd/system/kubelet.service << EOF
[Unit]
Description=Kubernetes Kubelet Server
Documentation=https://github.com/kubernetes/kubernetes
After=docker.service
Requires=docker.service

[Service]
EnvironmentFile=-/etc/kubernetes/config
EnvironmentFile=-/etc/kubernetes/kubelet
ExecStart=/usr/local/kubernetes/node/bin/kubelet \\
    \$KUBE_LOGTOSTDERR \\
    \$KUBE_LOG_LEVEL \\
    \$KUBELET_CONFIG \\
    \$KUBELET_HOSTNAME \\
    \$KUBELET_POD_INFRA_CONTAINER \\
    \$KUBELET_ARGS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

cat > /etc/kubernetes/config << EOF
KUBE_LOGTOSTDERR="--logtostderr=true"
KUBE_LOG_LEVEL="--v=0"
EOF

cat > /etc/kubernetes/kubelet << EOF
KUBELET_HOSTNAME="--hostname-override=192.168.1.17"
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.1"
KUBELET_CONFIG="--config=/etc/kubernetes/kubelet-config.yml"
KUBELET_ARGS="--bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.conf --kubeconfig=/etc/kubernetes/kubelet.conf --cert-dir=/etc/kubernetes/pki"
EOF

cat > /etc/kubernetes/kubelet-config.yml << EOF
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 192.168.1.17
port: 10250
cgroupDriver: cgroupfs
clusterDNS:
  - 10.96.0.114
clusterDomain: cluster.local.
hairpinMode: promiscuous-bridge
serializeImagePulls: false
authentication:
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.pem
  anonymous:
    enabled: false
  webhook:
    enabled: false
EOF

Start kubelet on node1

systemctl daemon-reload
systemctl enable kubelet
systemctl restart kubelet
systemctl status kubelet

On node2

node2, 192.168.1.18

cd /root
wget https://dl.k8s.io/v1.11.4/kubernetes-node-linux-amd64.tar.gz
tar -xf kubernetes-node-linux-amd64.tar.gz -C /usr/local/
mv /usr/local/kubernetes /usr/local/kubernetes-v1.11.4
ln -s kubernetes-v1.11.4 /usr/local/kubernetes

Configure and start kubelet

# systemd unit file
cat > /etc/systemd/system/kubelet.service << EOF
[Unit]
Description=Kubernetes Kubelet Server
Documentation=https://github.com/kubernetes/kubernetes
After=docker.service
Requires=docker.service

[Service]
EnvironmentFile=-/etc/kubernetes/config
EnvironmentFile=-/etc/kubernetes/kubelet
ExecStart=/usr/local/kubernetes/node/bin/kubelet \\
    \$KUBE_LOGTOSTDERR \\
    \$KUBE_LOG_LEVEL \\
    \$KUBELET_CONFIG \\
    \$KUBELET_HOSTNAME \\
    \$KUBELET_POD_INFRA_CONTAINER \\
    \$KUBELET_ARGS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

cat > /etc/kubernetes/config << EOF
KUBE_LOGTOSTDERR="--logtostderr=true"
KUBE_LOG_LEVEL="--v=0"
EOF

cat > /etc/kubernetes/kubelet << EOF
KUBELET_HOSTNAME="--hostname-override=192.168.1.18"
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.1"
KUBELET_CONFIG="--config=/etc/kubernetes/kubelet-config.yml"
KUBELET_ARGS="--bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.conf --kubeconfig=/etc/kubernetes/kubelet.conf --cert-dir=/etc/kubernetes/pki"
EOF

cat > /etc/kubernetes/kubelet-config.yml << EOF
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 192.168.1.18
port: 10250
cgroupDriver: cgroupfs
clusterDNS:
  - 10.96.0.114
clusterDomain: cluster.local.
hairpinMode: promiscuous-bridge
serializeImagePulls: false
authentication:
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.pem
  anonymous:
    enabled: false
  webhook:
    enabled: false
EOF

Start kubelet

systemctl daemon-reload
systemctl enable kubelet
systemctl restart kubelet
systemctl status kubelet

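On node3

Repeat the steps above, changing the IP address to 192.168.1.19 in both /etc/kubernetes/kubelet and /etc/kubernetes/kubelet-config.yml.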
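Because the per-node files differ only in the IP address, they can be rendered from a single template. The sketch below prints the contents of /etc/kubernetes/kubelet for a given node IP; the function name `render_kubelet_env` is hypothetical, and the same idea applies to the `address:` field of kubelet-config.yml.

```shell
#!/bin/sh
# Print the /etc/kubernetes/kubelet environment file for one node IP.
# Only --hostname-override changes between nodes; the other values are the
# ones used throughout this guide.
render_kubelet_env() {
  node_ip=$1
  cat << EOF
KUBELET_HOSTNAME="--hostname-override=${node_ip}"
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.1"
KUBELET_CONFIG="--config=/etc/kubernetes/kubelet-config.yml"
KUBELET_ARGS="--bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.conf --kubeconfig=/etc/kubernetes/kubelet.conf --cert-dir=/etc/kubernetes/pki"
EOF
}

render_kubelet_env 192.168.1.19
```

On the target node the output would be redirected to /etc/kubernetes/kubelet.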

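Approve the certificate requests to add each node

# Run on the master node

kubectl get csr

# Approve the requests so that the nodes join the cluster

kubectl get csr | awk '/node/{print $1}' | xargs kubectl certificate approve

# Example of approving a single request:

kubectl certificate approve node-csr-Yiiv675wUCvQl3HH11jDr0cC9p3kbrXWrxvG3EjWGoE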
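The awk filter in the bulk-approve pipeline selects every line containing "node", including requests that were already approved. A slightly stricter variant, sketched below, picks only the node requests whose last column is `Pending`. It assumes the CONDITION column is the last one in `kubectl get csr` output; the helper name `pending_node_csrs` is hypothetical.

```shell
#!/bin/sh
# Read `kubectl get csr` output on stdin and print the names of node
# bootstrap requests that are still pending. $NF is the last column
# (CONDITION), e.g. "Pending" or "Approved,Issued".
pending_node_csrs() {
  awk '/^node-csr-/ && $NF == "Pending" { print $1 }'
}

# Example use on the master (not executed here):
#   kubectl get csr | pending_node_csrs | xargs -r kubectl certificate approve
```

The `-r` option of GNU xargs skips the approve command when nothing is pending.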

# List the nodes

# The nodes are NotReady at this point because the network has not been configured yet

kubectl get nodes

[root@master ~]#kubectl get nodes

NAME STATUS ROLES AGE VERSION

192.168.1.16 NotReady <none> 6s v1.11.4

192.168.1.17 NotReady <none> 7s v1.11.4

192.168.1.18 NotReady <none> 7s v1.11.4

192.168.1.19 NotReady <none> 7s v1.11.4

# On a node, check the files generated by the bootstrap process

ls -l /etc/kubernetes/kubelet.conf

ls -l /etc/kubernetes/pki/kubelet*

6. Configure kube-proxy

kube-proxy must be configured on every machine, master and nodes alike.

On the master node

Install conntrack-tools

yum install -y conntrack-tools

Unit file

cat > /etc/systemd/system/kube-proxy.service << EOF
[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
EnvironmentFile=-/etc/kubernetes/config
EnvironmentFile=-/etc/kubernetes/proxy
ExecStart=/usr/local/kubernetes/server/bin/kube-proxy \\
    \$KUBE_LOGTOSTDERR \\
    \$KUBE_LOG_LEVEL \\
    \$KUBECONFIG \\
    \$KUBE_PROXY_ARGS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

# Enabling ipvs mainly means setting kube-proxy's --proxy-mode option to ipvs,
# together with --masquerade-all so that iptables assists ipvs
cat > /etc/kubernetes/proxy << EOF
KUBECONFIG="--kubeconfig=/etc/kubernetes/kube-proxy.conf"
KUBE_PROXY_ARGS="--proxy-mode=ipvs --masquerade-all=true --cluster-cidr=10.96.0.0/16"
EOF

Start the service

systemctl daemon-reload
systemctl enable kube-proxy
systemctl restart kube-proxy
systemctl status kube-proxy

On every node

Install conntrack-tools

yum install -y conntrack-tools

Unit file

cat > /etc/systemd/system/kube-proxy.service << EOF
[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
EnvironmentFile=-/etc/kubernetes/config
EnvironmentFile=-/etc/kubernetes/proxy
ExecStart=/usr/local/kubernetes/node/bin/kube-proxy \\
    \$KUBE_LOGTOSTDERR \\
    \$KUBE_LOG_LEVEL \\
    \$KUBECONFIG \\
    \$KUBE_PROXY_ARGS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

# Enabling ipvs mainly means setting kube-proxy's --proxy-mode option to ipvs,
# together with --masquerade-all so that iptables assists ipvs
cat > /etc/kubernetes/proxy << EOF
KUBECONFIG="--kubeconfig=/etc/kubernetes/kube-proxy.conf"
KUBE_PROXY_ARGS="--proxy-mode=ipvs --masquerade-all=true --cluster-cidr=10.96.0.0/16"
EOF

Start the service

systemctl daemon-reload
systemctl enable kube-proxy
systemctl restart kube-proxy
systemctl status kube-proxy

7. Set cluster roles

Run the following on the master node.

Label 192.168.1.16 as master:

kubectl label nodes 192.168.1.16 node-role.kubernetes.io/master=

Label 192.168.1.17 through 192.168.1.19 as node:

kubectl label nodes 192.168.1.17 node-role.kubernetes.io/node=
kubectl label nodes 192.168.1.18 node-role.kubernetes.io/node=
kubectl label nodes 192.168.1.19 node-role.kubernetes.io/node=

Taint the master so that it does not normally accept workloads:

kubectl taint nodes 192.168.1.16 node-role.kubernetes.io/master=true:NoSchedule

How to remove a label: append - to the label key (--overwrite is accepted but not needed for removal)

kubectl label nodes 192.168.1.231 node-role.kubernetes.io/node- --overwrite

List the nodes

# The nodes are still NotReady at this point
# The ROLES column now shows master and node
kubectl get node
NAME           STATUS     ROLES    AGE   VERSION
192.168.1.16   NotReady   master   1m    v1.11.4
192.168.1.17   NotReady   node     1m    v1.11.4
192.168.1.18   NotReady   node     1m    v1.11.4
192.168.1.19   NotReady   node     1m    v1.11.4

8. Configure the network

Choose one of the following network options:

Using the flannel network

cd /root/
wget https://soft.8090st.com/kubernetes/cni/flannel-v0.9.1-linux-amd64.tar.gz
tar zxvf flannel-v0.9.1-linux-amd64.tar.gz
mv flanneld mk-docker-opts.sh /usr/local/kubernetes/node/bin/
chmod +x /usr/local/kubernetes/node/bin/*

Create the flanneld.conf configuration file

cat > /etc/kubernetes/flanneld.conf << EOF
FLANNEL_OPTIONS="--etcd-endpoints=https://192.168.1.12:2379,https://192.168.1.13:2379,https://192.168.1.14:2379 --etcd-cafile=/etc/etcd/ssl/etcd-ca.pem --etcd-certfile=/etc/etcd/ssl/etcd.pem --etcd-keyfile=/etc/etcd/ssl/etcd-key.pem"
EOF

Create the system service

# \$FLANNEL_OPTIONS is escaped so that the shell writes it literally into the unit file
cat > /usr/lib/systemd/system/flanneld.service << EOF
[Unit]
Description=Flanneld overlay address etcd agent
After=network-online.target network.target
Before=docker.service

[Service]
Type=notify
EnvironmentFile=/etc/kubernetes/flanneld.conf
ExecStart=/usr/local/kubernetes/node/bin/flanneld --ip-masq \$FLANNEL_OPTIONS
ExecStartPost=/usr/local/kubernetes/node/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

Create the network segment

# Run the following command on an etcd cluster node to create the overlay network segment used by docker

etcdctl \
    --ca-file=/etc/etcd/ssl/etcd-ca.pem --cert-file=/etc/etcd/ssl/etcd.pem --key-file=/etc/etcd/ssl/etcd-key.pem \
    --endpoints="https://192.168.1.12:2379,https://192.168.1.13:2379,https://192.168.1.14:2379" \
    set /coreos.com/network/config '{ "Network": "10.96.0.0/16", "Backend": {"Type": "vxlan"}}'
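The value stored under /coreos.com/network/config is a small JSON document. To avoid quoting mistakes when the CIDR or backend changes, it can be built by a helper such as the one sketched below; the function name `flannel_network_config` is hypothetical, and it produces exactly the string used in the etcdctl command.

```shell
#!/bin/sh
# Build the flannel network configuration JSON.
# $1: overlay network CIDR, $2: backend type (for example vxlan).
flannel_network_config() {
  printf '{ "Network": "%s", "Backend": {"Type": "%s"}}\n' "$1" "$2"
}

flannel_network_config 10.96.0.0/16 vxlan
```

The result can be passed to etcdctl as the value argument, for instance `set /coreos.com/network/config "$(flannel_network_config 10.96.0.0/16 vxlan)"`.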

Modify the docker.service unit file

Add the subnet environment file. --graph changes the docker storage path.

vi /usr/lib/systemd/system/docker.service

# --graph changes docker's storage path from the default /var/lib/docker to /data/docker; create the directory beforehand
EnvironmentFile=/run/flannel/subnet.env
ExecStart=/usr/bin/dockerd --graph=/data/docker -H unix:// $DOCKER_NETWORK_OPTIONS $DOCKER_DNS_OPTIONS

Add a docker drop-in file to inject the DNS parameters

Fill in the DNS servers and search domains according to the DNS service actually deployed.

[root@k8s-node1 ~]# vi /usr/lib/systemd/system/docker.service.d/docker-dns.conf

[Service]
Environment="DOCKER_DNS_OPTIONS=\
    --dns 10.96.0.114 --dns 114.114.114.114 \
    --dns-search default.svc.ziji.work --dns-search svc.ziji.work \
    --dns-opt ndots:2 --dns-opt timeout:2 --dns-opt attempts:2"

Start flanneld, then restart docker (docker reads /run/flannel/subnet.env, which is written when flanneld starts)

systemctl daemon-reload
systemctl start flanneld
systemctl restart docker
systemctl status flanneld

Check that every node is in the Ready state

[root@master ~]# kubectl get node
NAME           STATUS   ROLES    AGE   VERSION
192.168.1.16   Ready    master   5h    v1.11.4
192.168.1.17   Ready    node     5h    v1.11.4
192.168.1.18   Ready    node     5h    v1.11.4
192.168.1.19   Ready    node     5h    v1.11.4

9. Configure CoreDNS

# 10.96.0.114 is the DNS address configured in kubelet (clusterDNS)
# Install coredns
cd /root && mkdir coredns && cd coredns
wget https://raw.githubusercontent.com/coredns/deployment/master/kubernetes/coredns.yaml.sed
wget https://raw.githubusercontent.com/coredns/deployment/master/kubernetes/deploy.sh
chmod +x deploy.sh
./deploy.sh -i 10.96.0.114 > coredns.yml
kubectl apply -f coredns.yml

# Check the result
kubectl get svc,pods -n kube-system
NAME               TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)         AGE
service/kube-dns   ClusterIP   10.96.0.114   <none>        53/UDP,53/TCP   2d
service/kubelet    ClusterIP   None          <none>        10250/TCP       4d

NAME                           READY   STATUS    RESTARTS   AGE
pod/coredns-5579c78574-dgrsp   1/1     Running   1          2d
pod/coredns-5579c78574-ss4xb   1/1     Running   1          2d
pod/coredns-5579c78574-v4bw9   1/1     Running   0          1d

10. Test

Create an nginx application to verify that workloads and DNS work

cd /root && mkdir nginx && cd nginx
cat << EOF > nginx.yaml
---
apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  selector:
    app: nginx
  type: NodePort
  ports:
  - port: 80
    nodePort: 31000
    name: nginx-port
    targetPort: 80
    protocol: TCP
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      name: nginx
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
EOF
kubectl apply -f nginx.yaml

Create a pod for testing DNS

kubectl run curl --image=radial/busyboxplus:curl -i --tty

nslookup kubernetes

nslookup nginx

curl nginx

exit

kubectl delete deployment curl

[ root@curl-87b54756-qf7l9:/ ]$ nslookup kubernetes

Server: 10.96.0.114

Address 1: 10.96.0.114 kube-dns.kube-system.svc.cluster.local

Name: kubernetes

Address 1: 10.96.0.114 kubernetes.default.svc.cluster.local

[ root@curl-87b54756-qf7l9:/ ]$ nslookup nginx

Server: 10.96.0.114

Address 1: 10.96.0.114 kube-dns.kube-system.svc.cluster.local

Name: nginx

Address 1: 10.96.93.85 nginx.default.svc.cluster.local

[ root@curl-87b54756-qf7l9:/ ]$ curl nginx

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

...

[ root@curl-87b54756-qf7l9:/ ]$ exit

Session ended, resume using 'kubectl attach curl-87b54756-qf7l9 -c curl -i -t' command when the pod is running

[root@master nginx]# kubectl delete deployment curl

deployment.extensions "curl" deleted

From an etcd node, run curl nodeIp:31000 to check that nginx is reachable from outside the cluster

curl 192.168.1.2:31000

[root@node5 ~]# curl 192.168.1.2:31000

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

Install ipvsadm to inspect the ipvs rules

# Install the ipvs-related packages
yum install -y ipvsadm ipset conntrack

[root@master ~]# ipvsadm -Ln --stats
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port        Conns   InPkts  OutPkts   InBytes  OutBytes
  -> RemoteAddress:Port
TCP  192.168.1.17:31072           0        0        0         0         0
  -> 10.96.66.2:8443              0        0        0         0         0
TCP  10.96.0.1:443                9   405977   405052  25038979   220448K
  -> 192.168.1.12:6443            5    16550    13449   2639489   2963775
  -> 192.168.1.13:6443            2       20       14      1576      4500
  -> 192.168.1.14:6443            2   389407   391589  22397914   217480K
TCP  10.96.0.114:53               3       18       12      1188      1101
  -> 10.96.32.2:53                1        6        4       396       367
  -> 10.96.66.4:53                2       12        8       792       734
  -> 10.96.66.8:53                0        0        0         0         0
TCP  10.96.3.196:1                0        0        0         0         0
  -> 192.168.1.12:1               0        0        0         0         0
  -> 192.168.1.13:1               0        0        0         0         0
  -> 192.168.1.14:1               0        0        0         0         0
  -> 192.168.1.16:1               0        0        0         0         0

Deploy the Dashboard

1. Pull the dashboard image

# The author's personal mirror of the image
registry.cn-hangzhou.aliyuncs.com/dnsjia/k8s:kubernetes-dashboard-amd64_v1.10.0

2. Download the yaml file

curl -O https://soft.8090st.com/kubernetes/dashboard/kubernetes-dashboard.yaml

Replace the certificate

With certificates generated automatically through --auto-generate-certificates, opening the dashboard fails with NET::ERR_CERT_INVALID.

The dashboard log reports that the certificate was not found. To solve this, mount a pre-generated dashboard.crt and dashboard.key into the container under /certs and then redeploy the deployment.

The self-signed certificate can be generated as follows

$ cd ~
$ mkdir certs
$ openssl genrsa -des3 -passout pass:x -out dashboard.pass.key 2048
...
$ openssl rsa -passin pass:x -in dashboard.pass.key -out dashboard.key
# Writing RSA key
$ rm dashboard.pass.key
$ openssl req -new -key dashboard.key -out dashboard.csr
...
Country Name (2 letter code) [AU]: US
...
A challenge password []:
...

Generate SSL certificate

The self-signed SSL certificate is generated from the dashboard.key private key and dashboard.csr files.

$ openssl x509 -req -sha256 -days 365 -in dashboard.csr -signkey dashboard.key -out dashboard.crt

Note: the certificate generated above expires after one year. To make it valid for 10 years, use -days 3650.

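Copy the generated certificate to the other nodes

The certificate is mounted here through hostPath, so the files must exist on every node that the dashboard may be scheduled to.

For other ways of mounting, see the [official documentation](https://kubernetes.io/docs/concepts/storage/volumes/).

Change the volumes section of kubernetes-dashboard.yaml to the following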

volumes:
- name: kubernetes-dashboard-certs
  # secret:
  #   secretName: kubernetes-dashboard-certs
  hostPath:
    path: /etc/kubernetes/certs
    type: Directory
- name: tmp-volume
  emptyDir: {}

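The default token lifetime is 900 s, which means logging in again every 15 minutes. To set a longer timeout, add the argument - --token-ttl=5400 (90 minutes) to the dashboard container args.

# First copy the certificate files to the other nodes
scp -r /etc/kubernetes/certs 192.168.1.17:/etc/kubernetes/certs
scp -r /etc/kubernetes/certs 192.168.1.18:/etc/kubernetes/certs
scp -r /etc/kubernetes/certs 192.168.1.19:/etc/kubernetes/certs

# Then run the following on the master node to deploy the UI
kubectl create -f kubernetes-dashboard.yaml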

Configure the Dashboard token

vi token.sh

#!/bin/bash
# Create the dashboard-admin ServiceAccount and bind it to cluster-admin, unless it already exists
if kubectl get sa dashboard-admin -n kube-system &> /dev/null; then
    echo -e "\033[33mWARNING: ServiceAccount dashboard-admin exist!\033[0m"
else
    kubectl create sa dashboard-admin -n kube-system
    kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin
fi

bash token.sh

# Print the login token
kubectl describe secret -n kube-system $(kubectl get secrets -n kube-system | grep dashboard-admin | cut -f1 -d ' ') | grep -E '^token'
token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tYzlrNXMiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiNDJkMjc1NTctZWZkMC0xMWU4LWFmNTgtMDAwYzI5OWJiNzhkIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.HoEtcgtjMbM_DZ8J3w5xq_gZrr1M-C5Axtt_PbGw39TbMqetsk1oCVNUdY5Hv_9z-liC-DBo2O-NO6IvPdrYBjgADwPBgc3fSjrZMfI8gDqwsKDIVF6VXCzaMAy-QeqUh-zgoqZa93MdBaBlhGQXtLyx0kso8XMGQccPndnzjqRw_8gWXNX2Lt5vLkEDTYcBMkqoGuwLJymQVtFVUwBHEHi9VIDgN4j5YV72ZDK320YgyS_nwjqwicyWpkDWq03yWhyJKyPGQ_Z8cylotCKr8jFqxU7oEoX7lfu3SJA19C_ds5Ak0OJi7tMobI59APL-u8xdigvd0MZivsQS0AWDsA
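When the token is needed in a script or on the clipboard, only the value after `token:` is wanted. The sketch below extracts it from `kubectl describe secret` output; the helper name `extract_token` is hypothetical, and the sample input used to exercise it is made up.

```shell
#!/bin/sh
# Read `kubectl describe secret` output on stdin and print only the token
# value. The field name is matched exactly so that other lines that merely
# contain the word "token" (for example the secret name) are ignored.
extract_token() {
  awk '$1 == "token:" { print $2 }'
}

# Example use on the master (not executed here):
#   kubectl describe secret -n kube-system \
#     $(kubectl get secrets -n kube-system | grep dashboard-admin | cut -f1 -d ' ') \
#     | extract_token
```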

Log in to the dashboard

1. Access through a node IP and port

kubectl get svc,pod -n kube-system -o wide
NAME                           TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)         AGE
service/kube-dns               ClusterIP   10.96.0.114    <none>        53/UDP,53/TCP   2d
service/kubelet                ClusterIP   None           <none>        10250/TCP       4d
service/kubernetes-dashboard   NodePort    10.96.44.102   <none>        443:31072/TCP   2d

NAME                                      READY   STATUS    RESTARTS   AGE   IP           NODE           NOMINATED NODE
pod/coredns-5579c78574-dgrsp              1/1     Running   1          2d    10.96.32.2   192.168.1.17   <none>
pod/coredns-5579c78574-ss4xb              1/1     Running   1          2d    10.96.66.4   192.168.1.18   <none>
pod/coredns-5579c78574-v4bw9              1/1     Running   0          2d    10.96.66.8   192.168.1.18   <none>
pod/kubernetes-dashboard-d6cc8d98f-ngbls  1/1     Running   1          2d    10.96.66.2   192.168.1.18   <none>

The dashboard pod was scheduled to node 192.168.1.18 and the service is exposed on NodePort 31072:

https://192.168.1.18:31072
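The NodePort is the number after the colon in the PORT(S) column. As a rough sketch, the access URL can be derived from that column value; the helper name `nodeport_of` is hypothetical, and the node IP is the one from the output above.

```shell
#!/bin/sh
# Extract the NodePort from a PORT(S) value of the form <port>:<nodePort>/<proto>,
# for example 443:31072/TCP. Prints nothing if the value has no NodePort part.
nodeport_of() {
  printf '%s\n' "$1" | sed -n 's|^[0-9][0-9]*:\([0-9][0-9]*\)/.*$|\1|p'
}

echo "https://192.168.1.18:$(nodeport_of 443:31072/TCP)"
```

A ClusterIP-only value such as 10250/TCP yields an empty result, which signals that the service is not exposed on the nodes.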

2. Configure ingress