Deploying a highly available Kubernetes 1.18.x cluster with kubeadm on Alibaba Cloud

Environment

| OS | IP | Docker | Kernel | Hostname | Role |
|---|---|---|---|---|---|
| Aliyun Linux | 10.118.77.20 | 19.03.6-ce | 4.14.171 | k8s-node-1 | Master |
| Aliyun Linux | 10.118.77.21 | 19.03.6-ce | 4.14.171 | k8s-node-2 | Master or node |
| Aliyun Linux | 10.118.77.22 | 19.03.6-ce | 4.14.171 | k8s-node-3 | Master or node |

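Prepare the environment

Configure hostnames and hosts

# On each node, set its hostname (replace hostname with that node's name from the list below)

hostnamectl --static set-hostname hostname

hostnamectl --transient set-hostname hostname

# Hostname to IP mapping

k8s-node-1 10.118.77.20

k8s-node-2 10.118.77.21

k8s-node-3 10.118.77.22

# Edit /etc/hosts so that the nodes can reach each other by hostname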

vi /etc/hosts

10.118.77.20 k8s-node-1

10.118.77.21 k8s-node-2

10.118.77.22 k8s-node-3

Disable SELinux and the firewall

sed -ri 's#(SELINUX=).*#\1disabled#' /etc/selinux/config

setenforce 0

systemctl disable firewalld

systemctl stop firewalld

Disable swap

# Disable it temporarily

swapoff -a
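`swapoff -a` only lasts until the next reboot, and the kubelet refuses to start by default while swap is enabled. A minimal sketch for making the change persistent, assuming swap is configured through `/etc/fstab` and GNU sed is available (the helper name `comment_out_swap` is made up for illustration):

```shell
# Hypothetical helper: comment out every active swap entry in an fstab-style file.
# Assumes GNU sed (for -r, -i and \s).
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  # Skip lines that are already comments; prefix the rest with '#'
  # when they contain a whitespace-delimited "swap" field.
  sed -ri '/^\s*#/!{/\sswap\s/s/^/#/;}' "$fstab"
}
```

Back up the file first, then run `comment_out_swap /etc/fstab` on every node.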

Add kernel settings

# Enable kernel user namespace support
grubby --args="user_namespace.enable=1" --update-kernel="$(grubby --default-kernel)"

# Set kernel parameters
cat<<EOF > /etc/sysctl.d/docker.conf
net.ipv4.ip_forward=1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-arptables = 1
vm.swappiness=0
EOF

# Apply the settings
sysctl --system

# Reboot the system
reboot

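Kernel tuning for Kubernetes

cat<<EOF > /etc/sysctl.d/kubernetes.conf
# Maximum number of connections tracked by conntrack
net.netfilter.nf_conntrack_max = 10485760
# Maximum number of packets allowed to queue on the input side
net.core.netdev_max_backlog = 10000
# Minimum number of entries kept in the ARP cache
net.ipv4.neigh.default.gc_thresh1 = 80000
# Soft limit on the number of entries in the ARP cache
net.ipv4.neigh.default.gc_thresh2 = 90000
# Hard limit on the number of entries in the ARP cache
net.ipv4.neigh.default.gc_thresh3 = 100000
EOF

# Apply the settings
sysctl --system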

Configure the IPVS modules

kube-proxy balances traffic in IPVS mode here, so the kernel must have the IPVS modules loaded; otherwise kube-proxy falls back to iptables mode.

Load the IPVS modules

cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF

# Make the script executable
chmod 755 /etc/sysconfig/modules/ipvs.modules

# Load the modules
bash /etc/sysconfig/modules/ipvs.modules

# Check that the modules are loaded
lsmod | grep -e ip_vs -e nf_conntrack_ipv4

# Output:
nf_conntrack_ipv4 20480 0
nf_defrag_ipv4 16384 1 nf_conntrack_ipv4
ip_vs_sh 16384 0
ip_vs_wrr 16384 0
ip_vs_rr 16384 0
ip_vs 147456 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr
nf_conntrack 110592 2 ip_vs,nf_conntrack_ipv4
libcrc32c 16384 2 xfs,ip_vs

Configure the yum repository

We use the Alibaba Cloud yum mirror here.

cat << EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

# Refresh the yum metadata cache
yum makecache

Install Docker

Check the system

curl -s https://raw.githubusercontent.com/docker/docker/master/contrib/check-config.sh | bash

Install Docker

yum makecache # rebuild the yum metadata cache

yum -y install docker # install Docker

# Or install a specific version from a chosen mirror

export VERSION=19.03

curl -fsSL "https://get.docker.com/" | bash -s -- --mirror Aliyun

Configure Docker

mkdir -p /etc/docker/

cat>/etc/docker/daemon.json<<EOF

{

"bip": "172.17.0.1/16",

"exec-opts": ["native.cgroupdriver=systemd"],

"registry-mirrors": ["https://9jwx2023.mirror.aliyuncs.com"],

"data-root": "/opt/docker",

"storage-driver": "overlay2",

"storage-opts": [

"overlay2.override_kernel_check=true"

],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m",

"max-file": "5"

},

"dns-search": ["default.svc.cluster.local", "svc.cluster.local", "localdomain"],

"dns-opts": ["ndots:2", "timeout:2", "attempts:2"]

}

EOF

Start Docker

systemctl enable docker

systemctl start docker

systemctl status docker

docker info

Deploy Kubernetes

Install the packages

# Kubernetes packages (master)

yum -y install tc kubelet-1.18.0 kubeadm-1.18.0 kubectl-1.18.0

# Kubernetes packages (worker node)

yum -y install tc kubelet-1.18.0 kubeadm-1.18.0

# IPVS tools

yum -y install ipvsadm ipset

# Enable the kubelet at boot

systemctl enable kubelet

Configure kubectl shell completion

# Install bash-completion

yum -y install bash-completion

# On Linux the script is installed at /usr/share/bash-completion/bash_completion by default

# Edit ~/.bashrc

vi ~/.bashrc

# Add the following:

# kubectl

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

# Apply the changes

source ~/.bashrc

Extend the certificate validity

- By default, the certificates issued by kubeadm are valid for 1 year

# Download the source code

git clone https://github.com/kubernetes/kubernetes

Cloning into 'kubernetes'...

remote: Enumerating objects: 219, done.

remote: Counting objects: 100% (219/219), done.

remote: Compressing objects: 100% (128/128), done.

remote: Total 1087208 (delta 112), reused 91 (delta 91), pack-reused 1086989

Receiving objects: 100% (1087208/1087208), 668.66 MiB | 486.00 KiB/s, done.

Resolving deltas: 100% (777513/777513), done.

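# List all branches

cd kubernetes

git branch -a

# Show the current branch

git branch

# Switch to the release branch

git checkout remotes/origin/release-1.18

# Open the file

vi staging/src/k8s.io/client-go/util/cert/cert.go

# The line below already defaults to 10 years, so it can be left alone; it can also be raised (for example to 99 years), but it must stay under 100 years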

NotAfter: now.Add(duration365d * 10).UTC(),

- Edit constants.go

# Open the file

vi cmd/kubeadm/app/constants/constants.go

# The default below is 1 year; change it to 10 years

CertificateValidity = time.Hour * 24 * 365

# Change it to

CertificateValidity = time.Hour * 24 * 365 * 10
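The same edit can be scripted instead of made by hand in vi. This is only a sketch: it assumes the constant appears in `constants.go` exactly as shown above, with nothing after `365` on that line, and the helper name `patch_cert_validity` is made up for illustration.

```shell
# Hypothetical helper: bump kubeadm's CertificateValidity from 1 year to 10 years.
# The trailing '$' keeps the substitution from matching an already-patched line.
patch_cert_validity() {
  local file="${1:-cmd/kubeadm/app/constants/constants.go}"
  sed -i 's/CertificateValidity = time\.Hour \* 24 \* 365$/CertificateValidity = time.Hour * 24 * 365 * 10/' "$file"
  # Print the resulting line so the change can be checked by eye.
  grep -n 'CertificateValidity = ' "$file"
}
```

Run `patch_cert_validity` from the root of the kubernetes checkout before rebuilding kubeadm.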

Rebuild kubeadm

make all WHAT=cmd/kubeadm GOFLAGS=-v

Replace the installed kubeadm

- Copy the new binary to every master

# The build output directory is _output/local/bin/linux/amd64

cp _output/local/bin/linux/amd64/kubeadm /usr/bin/kubeadm

cp: overwrite ‘/usr/bin/kubeadm’? y

Edit the kubeadm configuration

- Print the default YAML configuration for kubeadm init

kubeadm config print init-defaults

kubeadm config print init-defaults --component-configs KubeletConfiguration

kubeadm config print init-defaults --component-configs KubeProxyConfiguration

# Export the configuration

kubeadm config print init-defaults > kubeadm-init.yaml

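Every 127.0.0.1 in the configuration refers to the local Nginx API proxy that is set up in the next step. advertiseAddress: 10.118.77.23 and bindPort: 5443 are the address and port the API server process itself binds to, while controlPlaneEndpoint: "127.0.0.1:6443" is the address clients actually use to reach the API server. The point of this layout is to keep the API server highly available: every machine runs an Nginx instance that acts as a layer-4 proxy in front of all the API servers.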
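The edited kubeadm-init.yaml itself is not reproduced here, so the following is only a sketch of what the relevant fields might look like. Assumptions, none confirmed by the original file: the first master advertises 10.118.77.20 (its address in the environment table), the service and pod subnets are inferred from the addresses that show up later (10.254.0.x services, Flannel network 10.253.0.0/16), the `wrr` scheduler is taken from the `ipvsadm` output, and `imageRepository` points at a commonly used Alibaba Cloud mirror. The audit-policy settings are left out. It is written to a separate sample file so that the exported kubeadm-init.yaml is not overwritten.

```shell
# Sketch only: every value below is an assumption to be checked against your own environment.
cat > kubeadm-init.sample.yaml <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 10.118.77.20   # assumption: this node's own IP
  bindPort: 5443                   # port the API server binds to
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.18.0
controlPlaneEndpoint: "127.0.0.1:6443"   # the local Nginx proxy
imageRepository: registry.aliyuncs.com/google_containers   # assumption: mirror registry
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.254.0.0/16     # assumption: inferred from the service IPs
  podSubnet: 10.253.0.0/16         # assumption: matches the Flannel network
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
ipvs:
  scheduler: wrr                   # assumption: inferred from the ipvsadm output
EOF
```

Compare the sample with the exported defaults and merge in only the fields you need.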

Configure the Nginx proxy

# Create the configuration directory

mkdir -p /etc/nginx

# Write the proxy configuration

cat << EOF >> /etc/nginx/nginx.conf

error_log stderr notice;

worker_processes auto;

events {

multi_accept on;

use epoll;

worker_connections 10240;

}

stream {

upstream kube_apiserver {

least_conn;

server 10.118.77.20:5443;

server 10.118.77.21:5443;

server 10.118.77.22:5443;

}

server {

listen 0.0.0.0:6443;

proxy_pass kube_apiserver;

proxy_timeout 10m;

proxy_connect_timeout 1s;

}

}

EOF

Create the systemd service file

cat << EOF >> /etc/systemd/system/nginx-proxy.service
[Unit]
Description=kubernetes apiserver docker wrapper
Wants=docker.socket
After=docker.service

[Service]
User=root
PermissionsStartOnly=true
ExecStart=/usr/bin/docker run -p 127.0.0.1:6443:6443 \\
  -v /etc/nginx:/etc/nginx \\
  --name nginx-proxy \\
  --net=host \\
  --restart=on-failure:5 \\
  --memory=512M \\
  nginx:alpine
ExecStartPre=-/usr/bin/docker rm -f nginx-proxy
ExecStop=/usr/bin/docker stop nginx-proxy
Restart=always
RestartSec=15s
TimeoutStartSec=30s

[Install]
WantedBy=multi-user.target
EOF

Start the Nginx proxy

# Start Nginx

systemctl daemon-reload

systemctl start nginx-proxy

systemctl enable nginx-proxy

systemctl status nginx-proxy

Initialize the cluster

--upload-certs uploads the control-plane certificates so that they are copied automatically when further masters join.

kubeadm init --config kubeadm-init.yaml --upload-certs

# Output:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

  kubeadm join 127.0.0.1:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:ed09a75d84bfbb751462262757310d0cf3d015eaa45680130be1d383245354f8 \
    --control-plane --certificate-key 93cb0d7b46ba4ac64c6ffd2e9f022cc5f22bea81acd264fb4e1f6150489cd07a

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 127.0.0.1:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:ed09a75d84bfbb751462262757310d0cf3d015eaa45680130be1d383245354f8

# Copy the admin kubeconfig

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

# Check the cluster status

[root@k8s-node-1 kubeadm]# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

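Join nodes to the cluster

The kubeadm init output above contains two kubeadm join commands; the one with --control-plane joins a node as a master.

Tokens expire. If a join fails because the token is no longer valid, create a new one.

kubeadm token create --print-join-command creates a new token and prints the matching join command.

Join the other master nodes

All three servers here are masters, so every join uses the --control-plane option.

Create the audit policy file

# Create the directory on the other two servers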

ssh k8s-node-2 "mkdir -p /etc/kubernetes/"

ssh k8s-node-3 "mkdir -p /etc/kubernetes/"

# k8s-node-2

scp /etc/kubernetes/audit-policy.yaml k8s-node-2:/etc/kubernetes/

# k8s-node-3

scp /etc/kubernetes/audit-policy.yaml k8s-node-3:/etc/kubernetes/

Run the master join on each node

# First test connectivity to the API server

curl -k https://127.0.0.1:6443

# It returns:

{

"kind": "Status",

"apiVersion": "v1",

"metadata": {

},

"status": "Failure",

"message": "forbidden: User \"system:anonymous\" cannot get path \"/\"",

"reason": "Forbidden",

"details": {

},

"code": 403

}

- Add extra flags so that each master gets its own apiserver-advertise-address and apiserver-bind-port

# k8s-node-2 (set --apiserver-advertise-address to this node's own IP)

kubeadm join 127.0.0.1:6443 --token abcdef.0123456789abcdef \
  --discovery-token-ca-cert-hash sha256:ed09a75d84bfbb751462262757310d0cf3d015eaa45680130be1d383245354f8 \
  --control-plane --certificate-key 93cb0d7b46ba4ac64c6ffd2e9f022cc5f22bea81acd264fb4e1f6150489cd07a \
  --apiserver-advertise-address 10.18.77.117 \
  --apiserver-bind-port 5443

# k8s-node-3 (set --apiserver-advertise-address to this node's own IP)

kubeadm join 127.0.0.1:6443 --token abcdef.0123456789abcdef \
  --discovery-token-ca-cert-hash sha256:ed09a75d84bfbb751462262757310d0cf3d015eaa45680130be1d383245354f8 \
  --control-plane --certificate-key 93cb0d7b46ba4ac64c6ffd2e9f022cc5f22bea81acd264fb4e1f6150489cd07a \
  --apiserver-advertise-address 10.18.77.218 \
  --apiserver-bind-port 5443
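If the token or the uploaded certificates have expired by the time a master joins, the command has to be rebuilt from fresh values. A small sketch of how the pieces fit together; the helper name `control_plane_join_cmd` and its argument order are made up for illustration:

```shell
# Hypothetical helper: extend a worker join command into a control-plane join command.
#   $1  output of `kubeadm token create --print-join-command`
#   $2  certificate key printed by `kubeadm init phase upload-certs --upload-certs`
#   $3  this node's own IP (used for --apiserver-advertise-address)
#   $4  API server bind port (defaults to 5443, as used in this guide)
control_plane_join_cmd() {
  printf '%s --control-plane --certificate-key %s --apiserver-advertise-address %s --apiserver-bind-port %s\n' \
    "$1" "$2" "$3" "${4:-5443}"
}
```

On an existing master, capture the output of `kubeadm token create --print-join-command`, note the key reported by `kubeadm init phase upload-certs --upload-certs`, and pass both to the helper together with the joining node's IP. The helper only prints the command; review it before running it on the new master.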

Copy the kubeconfig file

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Verify the master nodes

STATUS shows NotReady because no network add-on has been installed yet.

# List the nodes

[root@k8s-node-1 kubeadm]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-node-1 NotReady master 106m v1.18.0

k8s-node-2 NotReady master 2m18s v1.18.0

k8s-node-3 NotReady master 63s v1.18.0

Let masters act as nodes

- This lets the masters run pods directly

Remove the master taint:

kubectl taint node node-name node-role.kubernetes.io/master-

To stop a master from running pods again:

kubectl taint nodes node-name node-role.kubernetes.io/master=:NoSchedule

Add a ROLES label:

kubectl label nodes localhost node-role.kubernetes.io/node=

Remove the ROLES label:

kubectl label nodes localhost node-role.kubernetes.io/node-

The ROLES label can carry any value, for example:

kubectl label nodes localhost node-role.kubernetes.io/ziji.work=

Deploy the worker nodes

- Worker nodes only need to run the join command

kubeadm join 127.0.0.1:6443 --token abcdef.0123456789abcdef \
  --discovery-token-ca-cert-hash sha256:ed09a75d84bfbb751462262757310d0cf3d015eaa45680130be1d383245354f8

# Output:

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Verify all nodes

STATUS shows NotReady because no network add-on has been installed yet.

Run kubectl get nodes to check that the nodes joined successfully.

[root@k8s-node-1 yaml]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-node-1 NotReady master 106m v1.18.0

k8s-node-2 NotReady master 2m18s v1.18.0

k8s-node-3 NotReady master 63s v1.18.0

k8s-node-4 NotReady <none> 2m46s v1.18.0

k8s-node-5 NotReady <none> 2m46s v1.18.0

k8s-node-6 NotReady <none> 2m46s v1.18.0

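Check the certificates

- If the certificates are replaced later, the renew command below has to be run on every master

# Renew the certificates

kubeadm alpha certs renew all

# Show the certificate expiry dates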

kubeadm alpha certs check-expiration

[root@k8s-node-1 kubeadm]# kubeadm alpha certs check-expiration

CERTIFICATE EXPIRES RESIDUAL TIME CERTIFICATE AUTHORITY EXTERNALLY MANAGED

admin.conf Mar 07, 2119 06:22 UTC 98y no

apiserver Mar 07, 2119 06:22 UTC 98y ca no

apiserver-etcd-client Mar 07, 2119 06:22 UTC 98y etcd-ca no

apiserver-kubelet-client Mar 07, 2119 06:22 UTC 98y ca no

controller-manager.conf Mar 07, 2119 06:22 UTC 98y no

etcd-healthcheck-client Mar 07, 2119 06:22 UTC 98y etcd-ca no

etcd-peer Mar 07, 2119 06:22 UTC 98y etcd-ca no

etcd-server Mar 07, 2119 06:22 UTC 98y etcd-ca no

front-proxy-client Mar 07, 2119 06:22 UTC 98y front-proxy-ca no

scheduler.conf Mar 07, 2119 06:22 UTC 98y no

CERTIFICATE AUTHORITY EXPIRES RESIDUAL TIME EXTERNALLY MANAGED

ca Mar 28, 2030 04:30 UTC 9y no

etcd-ca Mar 28, 2030 04:30 UTC 9y no

front-proxy-ca Mar 28, 2030 04:30 UTC 9y no

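Install the network add-on

Flannel

Download the Flannel YAML

# Download the YAML file

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Edit the Flannel configuration

Only the pod CIDR needs to be changed here.

vi kube-flannel.yml

# Set the IP range assigned to pods and the backend type (vxlan)

# "Type": "vxlan": cloud networks generally do not support host-gw mode, which only works on a layer-2 network.

# The relevant part is: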

data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.253.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }

---

# Apply the YAML file

[root@k8s-node-1 flannel]# kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created

# Check the pods

[root@k8s-node-1 flannel]# kubectl get pods -n kube-system -o wide |grep kube-flannel
kube-flannel-ds-amd64-2tw6q 1/1 Running 0 88s 10.18.77.61 k8s-node-1 <none> <none>
kube-flannel-ds-amd64-8nrtd 1/1 Running 0 88s 10.18.77.218 k8s-node-3 <none> <none>
kube-flannel-ds-amd64-frmk9 1/1 Running 0 88s 10.18.77.117 k8s-node-2 <none> <none>

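Tune the CoreDNS configuration

- Increase the number of replicas to match the number of nodes

- Ideally, constrain the CoreDNS pods so that they are scheduled onto different nodes.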

kubectl edit deploy coredns -n kube-system

kubectl scale deploy/coredns --replicas=3 -n kube-system
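One way to spread the CoreDNS pods across nodes is a pod anti-affinity rule. This is a sketch rather than the author's configuration: it relies on the `k8s-app: kube-dns` label that kubeadm puts on the CoreDNS pods, and the file name `coredns-anti-affinity.yaml` is made up for illustration. It uses a preferred (soft) rule so that pods can still be scheduled when there are fewer nodes than replicas.

```shell
# Sketch only: write a strategic-merge patch that asks the scheduler to
# avoid placing two CoreDNS pods on the same node.
cat > coredns-anti-affinity.yaml <<'EOF'
spec:
  template:
    spec:
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: k8s-app
                  operator: In
                  values: ["kube-dns"]
              topologyKey: kubernetes.io/hostname
EOF
```

Apply it with `kubectl -n kube-system patch deployment coredns --patch "$(cat coredns-anti-affinity.yaml)"` and check the result with `kubectl get pods -n kube-system -o wide`.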

- 使用

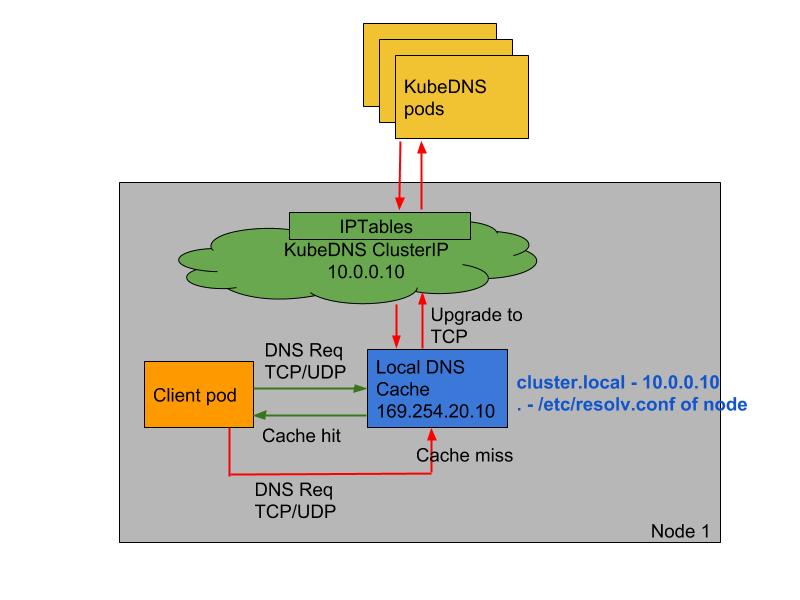

NodeLocal DNSCache 官方文档https://kubernetes.io/zh/docs/tasks/administer-cluster/nodelocaldns/NodeLocal DNSCache– 通过在集群节点上作为DaemonSet运行 dns 缓存代理来提高集群 DNS 性能。NodeLocal DNSCache– 集群中的 Pods 将可以访问在同一节点上运行的 dns 缓存代理,从而避免了iptables DNAT规则和连接跟踪。 本地缓存代理将查询 kube-dns 服务以获取集群主机名的缓存缺失(默认为 cluster.local 后缀)。NodeLocal DNSCache架构图

部署 NodeLocal DNSCache

建议在kubeadm init阶段以后就配置整体 dns- 如果在旧的集群部署

NodeLocal DNSCache原来的所有应用组件建议重新部署,包括网络组建, 否则会遇到很多莫名其妙问题。

# 下载 YAML

wget https://raw.githubusercontent.com/kubernetes/kubernetes/master/cluster/addons/dns/nodelocaldns/nodelocaldns.yaml

# Edit the configuration

sed -i 's/k8s\.gcr\.io/zijiwork/g' nodelocaldns.yaml

sed -i 's/__PILLAR__LOCAL__DNS__/169\.254\.20\.10/g' nodelocaldns.yaml

sed -i 's/__PILLAR__DNS__SERVER__/10\.254\.0\.10/g' nodelocaldns.yaml

sed -i 's/__PILLAR__DNS__DOMAIN__/cluster\.local/g' nodelocaldns.yaml

# __PILLAR__DNS__SERVER__: the IP of the CoreDNS service.

# __PILLAR__LOCAL__DNS__: the link-local IP (169.254.20.10 by default).

# __PILLAR__DNS__DOMAIN__: the cluster domain (cluster.local by default).

# Create the resources

[root@k8s-node-1 kubeadm]# kubectl apply -f nodelocaldns.yaml

# Check the pods and the service

[root@k8s-node-1 kubeadm]# kubectl get pods -n kube-system |grep node-local-dns

node-local-dns-mfxdk 1/1 Running 0 3m12s

[root@k8s-node-1 kubeadm]# kubectl get svc -n kube-system kube-dns-upstream

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-dns-upstream ClusterIP 10.254.45.66 <none> 53/UDP,53/TCP 23m

# Check the locally listening ports

[root@k8s-node-1 kubeadm]# netstat -lan|grep 169.254.20.10

tcp 0 0 169.254.20.10:53 0.0.0.0:* LISTEN

udp 0 0 169.254.20.10:53 0.0.0.0:*

Point the kubelet at NodeLocal DNSCache

- On a kubeadm-deployed cluster, the kubelet configuration lives in /var/lib/kubelet/config.yaml

vi /var/lib/kubelet/config.yaml

# Change

clusterDNS:
- 10.254.0.10

# to the node-local IP

clusterDNS:
- 169.254.20.10
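Because this edit has to be repeated on every node, it may be easier to script. A minimal sketch, assuming GNU sed and that the old address appears as a list item exactly as shown above; the helper name `set_cluster_dns` is made up for illustration:

```shell
# Hypothetical helper: point the kubelet's clusterDNS at the NodeLocal DNSCache address.
#   $1  kubelet config file (defaults to /var/lib/kubelet/config.yaml)
#   $2  old DNS address     (defaults to 10.254.0.10)
#   $3  new DNS address     (defaults to 169.254.20.10)
set_cluster_dns() {
  local config="${1:-/var/lib/kubelet/config.yaml}"
  local old="${2:-10.254.0.10}" new="${3:-169.254.20.10}"
  local old_re
  # Escape the dots so the old address is matched literally.
  old_re=$(printf '%s' "$old" | sed 's/\./\\./g')
  # Replace only list items ("- <old address>"), keeping their indentation.
  sed -i "s/^\(\s*-\s*\)${old_re}\s*\$/\1${new}/" "$config"
}
```

Run `set_cluster_dns` on each node; the kubelet restart that follows is still required for the change to take effect.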

Restart the kubelet

- The NodeLocal IP can also be configured up front, at the kubeadm init stage

# Restart the kubelet to apply the DNS change

systemctl daemon-reload && systemctl restart kubelet

Verify the whole cluster

Check the node status

Every STATUS should be Ready

[root@k8s-node-1 flannel]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-node-1 Ready master 131m v1.18.0

k8s-node-2 Ready master 27m v1.18.0

k8s-node-3 Ready master 26m v1.18.0

Check the pod status

[root@k8s-node-1 flannel]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-546565776c-9zbqz 1/1 Running 0 137m

kube-system coredns-546565776c-lz5fs 1/1 Running 0 137m

kube-system etcd-k8s-node-1 1/1 Running 0 138m

kube-system etcd-k8s-node-2 1/1 Running 0 34m

kube-system etcd-k8s-node-3 1/1 Running 0 33m

kube-system kube-apiserver-k8s-node-1 1/1 Running 0 138m

kube-system kube-apiserver-k8s-node-2 1/1 Running 0 34m

kube-system kube-apiserver-k8s-node-3 1/1 Running 0 33m

kube-system kube-controller-manager-k8s-node-1 1/1 Running 1 138m

kube-system kube-controller-manager-k8s-node-2 1/1 Running 0 34m

kube-system kube-controller-manager-k8s-node-3 1/1 Running 0 33m

kube-system kube-flannel-ds-amd64-2tw6q 1/1 Running 0 9m11s

kube-system kube-flannel-ds-amd64-8nrtd 1/1 Running 0 9m11s

kube-system kube-flannel-ds-amd64-frmk9 1/1 Running 0 9m11s

kube-system kube-proxy-9qv4l 1/1 Running 0 34m

kube-system kube-proxy-f29dk 1/1 Running 0 137m

kube-system kube-proxy-zgjnf 1/1 Running 0 33m

kube-system kube-scheduler-k8s-node-1 1/1 Running 1 138m

kube-system kube-scheduler-k8s-node-2 1/1 Running 0 34m

kube-system kube-scheduler-k8s-node-3 1/1 Running 0 33m

Check the service status

[root@k8s-node-1 flannel]# kubectl get svc --all-namespaces

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.254.0.1 <none> 443/TCP 138m

kube-system kube-dns ClusterIP 10.254.0.10 <none> 53/UDP,53/TCP,9153/TCP 138m

Check the IPVS status

[root@k8s-node-1 flannel]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.254.0.1:443 wrr

-> 10.18.77.61:5443 Masq 1 2 0

-> 10.18.77.117:5443 Masq 1 0 0

-> 10.18.77.218:5443 Masq 1 0 0

TCP 10.254.0.10:53 wrr

-> 10.254.64.3:53 Masq 1 0 0

-> 10.254.65.4:53 Masq 1 0 0

TCP 10.254.0.10:9153 wrr

-> 10.254.64.3:9153 Masq 1 0 0

-> 10.254.65.4:9153 Masq 1 0 0

TCP 10.254.28.93:80 wrr

-> 10.254.65.5:80 Masq 1 0 1

-> 10.254.66.3:80 Masq 1 0 2

UDP 10.254.0.10:53 wrr

-> 10.254.64.3:53 Masq 1 0 0

-> 10.254.65.4:53 Masq 1 0 0

Test the cluster

Create an nginx deployment
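The nginx-deployment.yaml manifest is not shown in this guide. A minimal sketch that is consistent with the names appearing in the command output (deployment `nginx-dm` with two pods, service `nginx-svc` on port 80, selector `name=nginx`); the image tag is an assumption:

```shell
# Sketch only: reconstructs a manifest matching the object names in the output;
# it is not the author's original file.
cat > nginx-deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-dm
spec:
  replicas: 2
  selector:
    matchLabels:
      name: nginx
  template:
    metadata:
      labels:
        name: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:alpine   # assumption: the original image tag is not given
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  selector:
    name: nginx
  ports:
  - port: 80
    targetPort: 80
    protocol: TCP
EOF
```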

# Apply the file

[root@k8s-node-1 kubeadm]# kubectl apply -f nginx-deployment.yaml

deployment.apps/nginx-dm created

service/nginx-svc created

# Check the pods

[root@k8s-node-1 kubeadm]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-dm-8665b6b679-lf72f 1/1 Running 0 37s

nginx-dm-8665b6b679-mqn5f 1/1 Running 0 37s

# Check the service

[root@k8s-node-1 kubeadm]# kubectl get svc -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kubernetes ClusterIP 10.254.0.1 <none> 443/TCP 146m <none>

nginx-svc ClusterIP 10.254.23.158 <none> 80/TCP 54s name=nginx

Access the service

# Access the service from node-1

[root@k8s-node-1 yaml]# curl 10.254.28.93

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

# Check the IPVS rules

[root@k8s-node-1 yaml]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.254.0.1:443 wrr

-> 10.18.77.61:5443 Masq 1 2 0

-> 10.18.77.117:5443 Masq 1 0 0

-> 10.18.77.218:5443 Masq 1 0 0

TCP 10.254.0.10:53 wrr

-> 10.254.64.3:53 Masq 1 0 0

-> 10.254.65.4:53 Masq 1 0 0

TCP 10.254.0.10:9153 wrr

-> 10.254.64.3:9153 Masq 1 0 0

-> 10.254.65.4:9153 Masq 1 0 0

TCP 10.254.28.93:80 wrr

-> 10.254.65.5:80 Masq 1 0 10

-> 10.254.66.3:80 Masq 1 0 10

UDP 10.254.0.10:53 wrr

-> 10.254.64.3:53 Masq 1 0 0

-> 10.254.65.4:53 Masq 1 0 0

Verify the DNS service

# Test

[root@k8s-node-1 kubeadm]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-dm-8665b6b679-28zbw 1/1 Running 0 7m54s

nginx-dm-8665b6b679-h5rhn 1/1 Running 0 7m54s

# The kubernetes service

[root@k8s-node-1 kubeadm]# kubectl exec -it nginx-dm-8665b6b679-28zbw -- nslookup kubernetes

nslookup: can't resolve '(null)': Name does not resolve

Name: kubernetes

Address 1: 10.254.0.1 kubernetes.default.svc.cluster.local

# The nginx-svc service

[root@k8s-node-1 kubeadm]# kubectl exec -it nginx-dm-8665b6b679-28zbw -- nslookup nginx-svc

nslookup: can't resolve '(null)': Name does not resolve

Name: nginx-svc

Address 1: 10.254.27.199 nginx-svc.default.svc.cluster.local

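Deploy Metrics-Server

About Metrics-Server

Since v1.11, Kubernetes no longer supports collecting monitoring data through Heapster. Its replacement, metrics-server, is much lighter than Heapster, does not persist any data, and serves real-time metrics queries.

Create the Metrics-Server manifest

# Apply the manifest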

[root@k8s-node-1 metrics]# kubectl apply -f metrics-server.yaml

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

serviceaccount/metrics-server created

serviceaccount/metrics-server unchanged

deployment.apps/metrics-server created

service/metrics-server created

Check the pod

[root@k8s-node-1 metrics]# kubectl get pods -n kube-system |grep metrics

metrics-server-7b5b7fd65-v8sqc 1/1 Running 0 11s

Test metrics collection

- If you see error: metrics not available yet, wait a while for metrics to be collected and query again

- Show pod metrics

[root@k8s-node-1 metrics]# kubectl top pods -n kube-system

NAME CPU(cores) MEMORY(bytes)

coredns-546565776c-9zbqz 2m 5Mi

coredns-546565776c-lz5fs 2m 5Mi

etcd-k8s-node-1 27m 75Mi

etcd-k8s-node-2 25m 76Mi

etcd-k8s-node-3 23m 75Mi

kube-apiserver-k8s-node-1 21m 272Mi

kube-apiserver-k8s-node-2 19m 277Mi

kube-apiserver-k8s-node-3 23m 279Mi

kube-controller-manager-k8s-node-1 12m 37Mi

kube-controller-manager-k8s-node-2 2m 12Mi

kube-controller-manager-k8s-node-3 2m 12Mi

kube-flannel-ds-amd64-f2ck7 2m 8Mi

kube-flannel-ds-amd64-g6tp6 2m 8Mi

kube-flannel-ds-amd64-z2cvb 2m 9Mi

kube-proxy-9qv4l 12m 9Mi

kube-proxy-f29dk 11m 9Mi

kube-proxy-zgjnf 10m 9Mi

kube-scheduler-k8s-node-1 3m 9Mi

kube-scheduler-k8s-node-2 2m 8Mi

kube-scheduler-k8s-node-3 2m 10Mi

metrics-server-7ff8dccd5b-jsjkk 2m 13Mi

Show node metrics

[root@k8s-node-1 metrics]# kubectl top nodes

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-node-1 110m 5% 1100Mi 28%

k8s-node-2 97m 4% 1042Mi 27%

k8s-node-3 94m 4% 1028Mi 26%

Deploy the Nginx ingress controller

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.34.1/deploy/static/provider/cloud/deploy.yaml

# Image location in the manifest

image: us.gcr.io/k8s-artifacts-prod/ingress-nginx/controller:v0.34.1@sha256:0e072dddd1f7f8fc8909a2ca6f65e76c5f0d2fcfb8be47935ae3457e8bbceb20

# Replace it with

image: zijiwork/controller:v0.34.1

# Change the following part:

# Source: ingress-nginx/templates/controller-service.yaml
apiVersion: v1
kind: Service

# From:

type: LoadBalancer
externalTrafficPolicy: Local

# To (externalTrafficPolicy can only be set on NodePort and LoadBalancer services, so remove it):

type: ClusterIP

# Deployment section

# Source: ingress-nginx/templates/controller-deployment.yaml
apiVersion: apps/v1
kind: Deployment

# Configure node affinity

# Configure hostNetwork

# Configure dnsPolicy: ClusterFirstWithHostNet

# Find the following:

spec:
  dnsPolicy: ClusterFirst

# and change it to:

spec:
  hostNetwork: true
  dnsPolicy: ClusterFirstWithHostNet

Apply the manifest

[root@k8s-node-1 ingress]# kubectl apply -f deploy.yaml

namespace/ingress-nginx created

serviceaccount/ingress-nginx created

configmap/ingress-nginx-controller created

clusterrole.rbac.authorization.k8s.io/ingress-nginx created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created

role.rbac.authorization.k8s.io/ingress-nginx created

rolebinding.rbac.authorization.k8s.io/ingress-nginx created

service/ingress-nginx-controller-admission created

service/ingress-nginx-controller created

deployment.apps/ingress-nginx-controller created

validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission created

clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

job.batch/ingress-nginx-admission-create created

job.batch/ingress-nginx-admission-patch created

role.rbac.authorization.k8s.io/ingress-nginx-admission created

rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

serviceaccount/ingress-nginx-admission created

Check the service status

[root@k8s-node-1 ingress]# kubectl get pods,svc -n ingress-nginx

NAME READY STATUS RESTARTS AGE

pod/ingress-nginx-admission-create-dgg76 0/1 Completed 0 114s

pod/ingress-nginx-admission-patch-f65qj 0/1 Completed 1 114s

pod/ingress-nginx-controller-5f4cb6d6f4-pft6x 1/1 Running 0 2m4s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/ingress-nginx-controller ClusterIP 10.99.155.236 <none> 80/TCP,443/TCP 2m4s

service/ingress-nginx-controller-admission ClusterIP 10.102.95.56 <none> 443/TCP 2m4s

Test the ingress

# Look up the nginx service created earlier

[root@k8s-node-1 ingress]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.254.0.1 <none> 443/TCP 74m

nginx-svc ClusterIP 10.254.52.255 <none> 80/TCP 19m

# Create an ingress for nginx-svc

vi nginx-ingress.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: nginx-ingress
spec:
  rules:
  - host: nginx.ziji.work
    http:
      paths:
      - backend:
          serviceName: nginx-svc
          servicePort: 80

# Apply the YAML

[root@k8s-node-1 kubeadm]# kubectl apply -f nginx-ingress.yaml

ingress.extensions/nginx-ingress created

# Check the ingress

[root@k8s-node-1 kubeadm]# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

nginx-ingress <none> nginx.ziji.work 80 34s

Test access

[root@k8s-node-1 kubeadm]# curl -I nginx.ziji.work

HTTP/1.1 200 OK

Server: nginx/1.17.8

Date: Mon, 30 Mar 2020 08:54:56 GMT

Content-Type: text/html

Content-Length: 612

Connection: keep-alive

Vary: Accept-Encoding

Last-Modified: Tue, 03 Mar 2020 17:36:53 GMT

ETag: "5e5e95b5-264"

Accept-Ranges: bytes

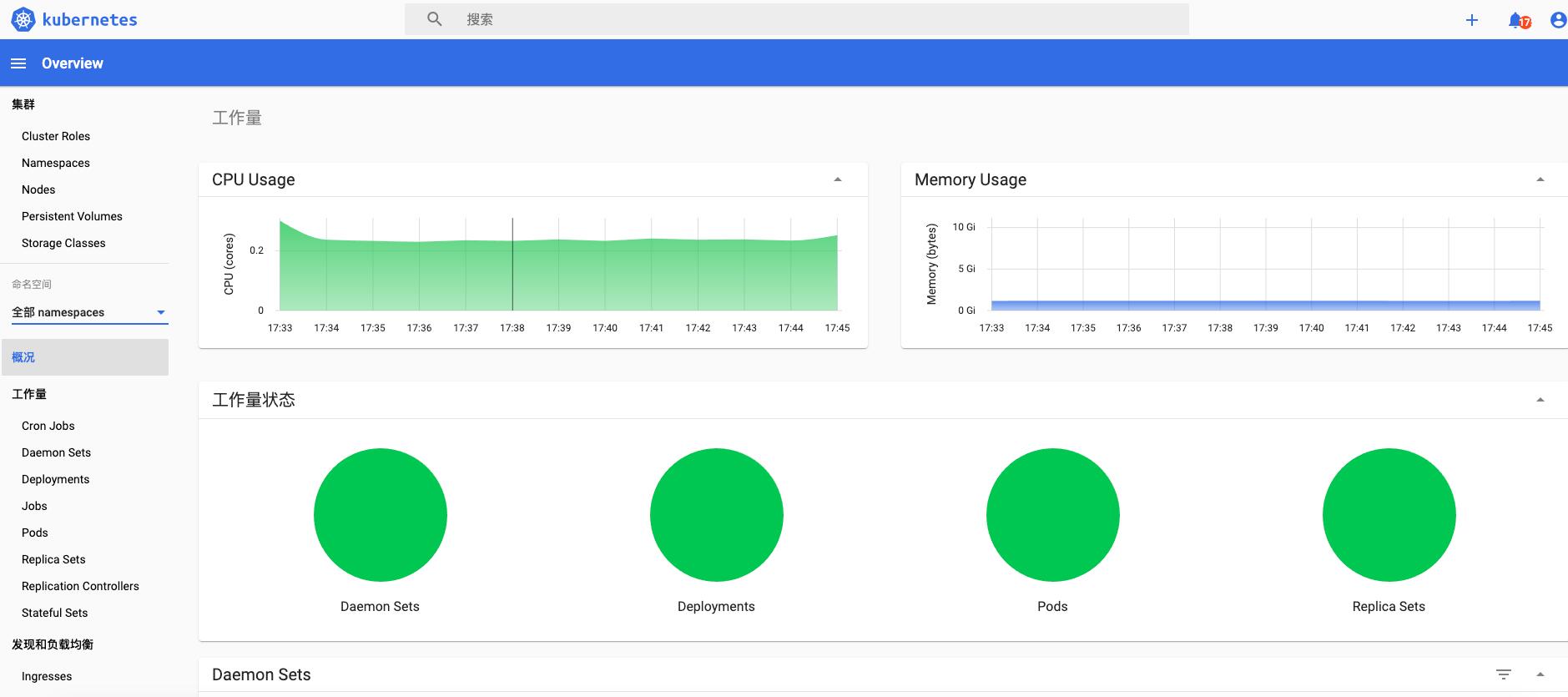

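Deploy the Dashboard

Dashboard is the general-purpose web UI for Kubernetes clusters. It lets users manage and troubleshoot the applications running in the cluster, as well as manage the cluster itself.

# Download the YAML file

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-rc6/aio/deploy/recommended.yaml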

[root@k8s-node-1 dashboard]# kubectl apply -f recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

Check the service status

[root@k8s-node-1 dashboard]# kubectl get pods -n kubernetes-dashboard |grep dashboard

dashboard-metrics-scraper-779f5454cb-8m5p5 1/1 Running 0 19s

kubernetes-dashboard-64686c4bf9-bwvvj 1/1 Running 0 19s

# Services

[root@k8s-node-1 dashboard]# kubectl get svc -n kubernetes-dashboard |grep dashboard

dashboard-metrics-scraper ClusterIP 10.254.39.66 <none> 8000/TCP 43s

kubernetes-dashboard ClusterIP 10.254.53.202 <none> 443/TCP 44s

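Expose to the public network

There are several ways to reach a Kubernetes service from outside, that is, to expose a port inside the cluster to the external network:

- LoadBalancer (load balancers provided by supported public clouds)

- NodePort (maps a port on every node and exposes it publicly)

- Ingress (serves traffic through a reverse proxy such as Nginx or HAProxy)

Deploying the dashboard generates a self-signed certificate, kubernetes-dashboard-certs, which the ingress uses later.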

[root@k8s-node-1 dashboard]# kubectl get secret -n kubernetes-dashboard

NAME TYPE DATA AGE

default-token-nnn5x kubernetes.io/service-account-token 3 6m32s

kubernetes-dashboard-certs Opaque 0 6m32s

kubernetes-dashboard-csrf Opaque 1 6m32s

kubernetes-dashboard-key-holder Opaque 2 6m32s

kubernetes-dashboard-token-7plmf kubernetes.io/service-account-token 3 6m32s

# Create the dashboard ingress

# The backend annotation changed in v0.21.0, so take care:

# nginx-ingress < v0.21.0 uses nginx.ingress.kubernetes.io/secure-backends: "true"

# nginx-ingress > v0.21.0 uses nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"

# Create the ingress file

vi dashboard-ingress.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
  annotations:
    ingress.kubernetes.io/ssl-passthrough: "true"
    nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
spec:
  tls:
  - hosts:
    - dashboard.ziji.work
    secretName: kubernetes-dashboard-certs
  rules:
  - host: dashboard.ziji.work
    http:
      paths:
      - path: /
        backend:
          serviceName: kubernetes-dashboard
          servicePort: 443

# Apply the YAML

[root@k8s-node-1 dashboard]# kubectl apply -f dashboard-ingress.yaml

ingress.extensions/kubernetes-dashboard created

# Check the ingress

[root@k8s-node-1 dashboard]# kubectl get ingress -n kubernetes-dashboard

NAME CLASS HOSTS ADDRESS PORTS AGE

kubernetes-dashboard <none> dashboard.ziji.work 80, 443 2m53s

Test access

[root@k8s-node-1 dashboard]# curl -I -k https://dashboard.ziji.work

HTTP/2 200

server: nginx/1.17.8

date: Mon, 30 Mar 2020 09:41:02 GMT

content-type: text/html; charset=utf-8

content-length: 1287

vary: Accept-Encoding

accept-ranges: bytes

cache-control: no-store

last-modified: Fri, 13 Mar 2020 13:43:54 GMT

strict-transport-security: max-age=15724800; includeSubDomains

Token login

# Create a dashboard RBAC admin account

vi dashboard-admin-rbac.yaml

---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-admin
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard-admin
  labels:
    k8s-app: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard-admin
  namespace: kubernetes-dashboard

# Apply the file

[root@k8s-node-1 dashboard]# kubectl apply -f dashboard-admin-rbac.yaml

serviceaccount/kubernetes-dashboard-admin created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-admin created

# Find the secret

[root@k8s-node-1 dashboard]# kubectl get secret -n kubernetes-dashboard | grep kubernetes-dashboard-admin

kubernetes-dashboard-admin-token-9dkg4 kubernetes.io/service-account-token 3 38s

# Show the token

[root@k8s-node-1 dashboard]# kubectl describe -n kubernetes-dashboard secret/kubernetes-dashboard-admin-token-9dkg4

Name: kubernetes-dashboard-admin-token-9dkg4

Namespace: kubernetes-dashboard

Labels: <none>

Annotations: kubernetes.io/service-account.name: kubernetes-dashboard-admin

kubernetes.io/service-account.uid: aee23b33-43a4-4fb4-b498-6c2fb029d63c

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IlI4UlpGcTcwR2hkdWZfZWk1X0RUcVI5dkdraXFnNW8yYUV1VVRPQlJYMEkifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbi10b2tlbi05ZGtnNCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImFlZTIzYjMzLTQzYTQtNGZiNC1iNDk4LTZjMmZiMDI5ZDYzYyIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDprdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbiJ9.oyvo_bIM0Ukbs3ov8XbmJffpdK1nec7oKJBxu8V4vesPY_keQhNS9xiAw6zdF2Db2tiEzcpmN3SAgwGjfid5rlSQxGpNK3mkp1r60WSAhyU5e7RqwA9xRO-EtCZ2akrqFKzEn4j_7FGwbKbNsdRurDdOLtKU5KvFsFh5eRxvB6PECT2mgSugfHorrI1cYOw0jcQKE_hjVa94xUseYX12PyGQfoUyC6ZhwIBkRnCSNdbcb0VcGwTerwysR0HFvozAJALh_iOBTDYDUNh94XIRh2AHCib-KVoJt-e2jUaGH-Z6yniLmNr15q5xLfNBd1qPpZHCgoJ1JYz4TeF6udNxIA

FAQ

Failed to get system container stats for "/system.slice/docker.service": failed to get cgroup stats

This is caused by an incompatibility between the Kubernetes and Docker versions.

# Open the 10-kubeadm.conf file

vi /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf

# Add the following:

Environment="KUBELET_CGROUP_ARGS=--cgroup-driver=systemd --runtime-cgroups=/systemd/system.slice --kubelet-cgroups=/systemd/system.slice"

Rename a node

vi /var/lib/kubelet/kubeadm-flags.env

# Change the --hostname-override= value in it

# Restart the kubelet

systemctl daemon-reload

systemctl restart kubelet

# Delete the old node

kubectl delete no node-name

# List the CSRs

[root@k8s-node-1 kubeadm]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

csr-nzhlq 17s kubernetes.io/kube-apiserver-client-kubelet system:node:localhost Pending

# Approve the CSR

[root@k8s-node-1 kubeadm]# kubectl certificate approve csr-nzhlq

# After approval, list the nodes again

[root@k8s-node-1 kubeadm]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-node-1 NotReady <none> 8s v1.18.0

# It takes a little while for the status to change

[root@k8s-node-1 kubeadm]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-node-1 Ready <none> 63s v1.18.0